PostgreSQL comes with an excellent set of features, unmatched in the open-source RDBMS space. It is mostly easy to learn and use, especially for application developers. However, some parts are, well, simply not easy. They require work to setup and get right, and are typically mission-critical too.

Connection Management

PostgreSQL launches a new process, called a backend process, to handle each connection. This is in contrast to modern, eventloop/threadpool based connection handling architectures found in other comparable server software. Spawning a full process takes more time and resources, and manifests as increased query latencies in applications where connections are opened and closed at a high rate.

In most deployments, pooling of connections is required at some level. At an application level, this can be using your programming language/library features. For example sql/DB.SetMaxIdleConns can be used to increase connection reuse from within a single Go application.

Often though, you’ll have to use a third-party connection pooling or load balancing solution. Connection poolers maintain a pool of idle connections to the upstream Postgres server, which are assigned and proxied to incoming client connections. They typically parse the SQL sent by clients to recognize transaction boundaries and data-modifying DMLs to implement features like transaction-level connection pooling and read replicas.

PgBouncer is a popular, lightweight, single-binary connection pooler and is often run alongside PostgreSQL in the same system.

PgPool is more versatile than PgBouncer. It can also do load balancing and replication for instance.

Connection pooling brings its own set of headaches though. Firstly, it is an additional moving part that has be maintained in your deployment. Setting up authentication is also a pain if you have clients that use different credentials or authentication mechanisms. Some connection-level features like LISTEN/NOTIFY, prepared statements, temporary tables and the like might require extra configuration or client-side changes to work.

Zero Downtime Upgrades

Upgrading PostgreSQL between minor versions (13.x -> 13.y) involves installation of the new package and restarting the server process. While restarting the server process will necessarily disrupt all connected clients, it is still a reasonable ask, given that the downtime is bound to the duration of a service restart.

Upgrading between major (12.x -> 13.y) versions, however, is a much bigger deal. Typically, the more data there is, the more painful the process is.

The simplest method, that works only for low amounts of data (say tens of GBs), is to dump the data from the old version and restore it into a new-version server. Another option is to use pg_upgrade, which requires an orchestrated dance involving binaries of both version of Postgres.

In either case, the databases would be down for a considerable amount of time.

Ideally, it should be possible to replicate to a new version server, and promote the new version server as the primary. However, it is not possible to do streaming replication to a standby server with a different major version. Logical replication, while it looks well-suited for the job, has certain gotchas that need to be worked around to ensure complete replication.

Most HA solutions for Postgres rely on streaming replication, and therefore you cannot upgrade nodes in a cluster one at a time.

Current state of the art would be to use logical replication, while working around the limitations of logical replication, and possibly involving restricting features that applications can use (like DDLs) during the upgrade phase.

High Availability

PostgreSQL comes with all the low-level features required to build an HA solution: replication with feedback, cascading replication, synchronous replication, standbys, hot standbys, standby promotion and so on. It does not however, actually provide an HA solution out of the box. There are no frameworks or tools to monitor health and automatically failover to a standby. There is no notion of a multi-node HA cluster.

You’ll have to setup and run a third-party solution for creating high availability Postgres deployments. Current favorites are pg_auto_failover and Patroni. While Patroni relies on an existing highly-available configuration store like ZooKeeper or etcd, pg_auto_failover can make do without one.

Evaluating, deploying and testing one of these in production takes time and effort. Monitoring, alerting and ops playbooks have to be setup and maintained.

Bloat Management

PostgreSQL’s MVCC architecture means that no data is ever overwritten – modifying a row only results in a new version of the row being written out to disk. Deleting a row only means recording that the row is invisible to future transactions. When a row version is inaccessible from any ongoing or future transactions it is no longer of any use, and is termed “bloat”. The process of garbage-collecting this bloat is called “vacuum”.

Bloat is invisible to applications, and becomes solely the DBA’s headache. For update-heavy tables, monitoring and managing bloat is a non-trivial issue. The autovacuum process helps a lot, but its thresholds may need to be tuned at a global or per-table level to ensure table sizes do not grow unmanageably large.

Indexes are also affected by bloat, and autovacuum does not help here. Deletion of rows and update of indexed columns lead to dead entries in indexes. Update-heavy workloads with upates to indexed columns can lead to constantly growing and inefficient indexes. There is no equivalent of vacuum for indexes. The only solution is to rebuild the whole index using REINDEX or use VACUUM FULL on the the table.

Other than a single value per table (pg_stat_all_tables.n_dead_tup), Postgres offers nothing in the way of estimating the bloat in a table, and nothing at all for indexes. The most practical way still remains executing a scary-looking query from check_postgres.

pgmetrics incorporates the query from check_postgres, and can produce JSON and CSV format output that includes size and bloat information for all tables and indexes; which can be fed into monitoring or automation tools.

pg_repack is a popular alternative to VACUUM FULL – it can do the same job but without locks. If you’re forced to do VACUUM FULL regularly, it is a must-investigate tool.

zheap is the new storage engine for Postgres that has been in development for years, which promises to reduce bloat through in-place updates.

Query Plan Management

Core PostgreSQL offers only two rudimentary tools in this space:

- pg_stat_statements extension for query analytics – this gives the total and averages of query planning and execution times, disk and memory usage

- auto_explain extension, which can print query execution plans into the Postgres log destination

While the stats provided by pg_stat_statements are just enough to get by, using auto_explain to force plans into log files and then extracting them is not really more than a hack especially compared to commercial competitors to Postgres, who offer plan history, baselining and management features.

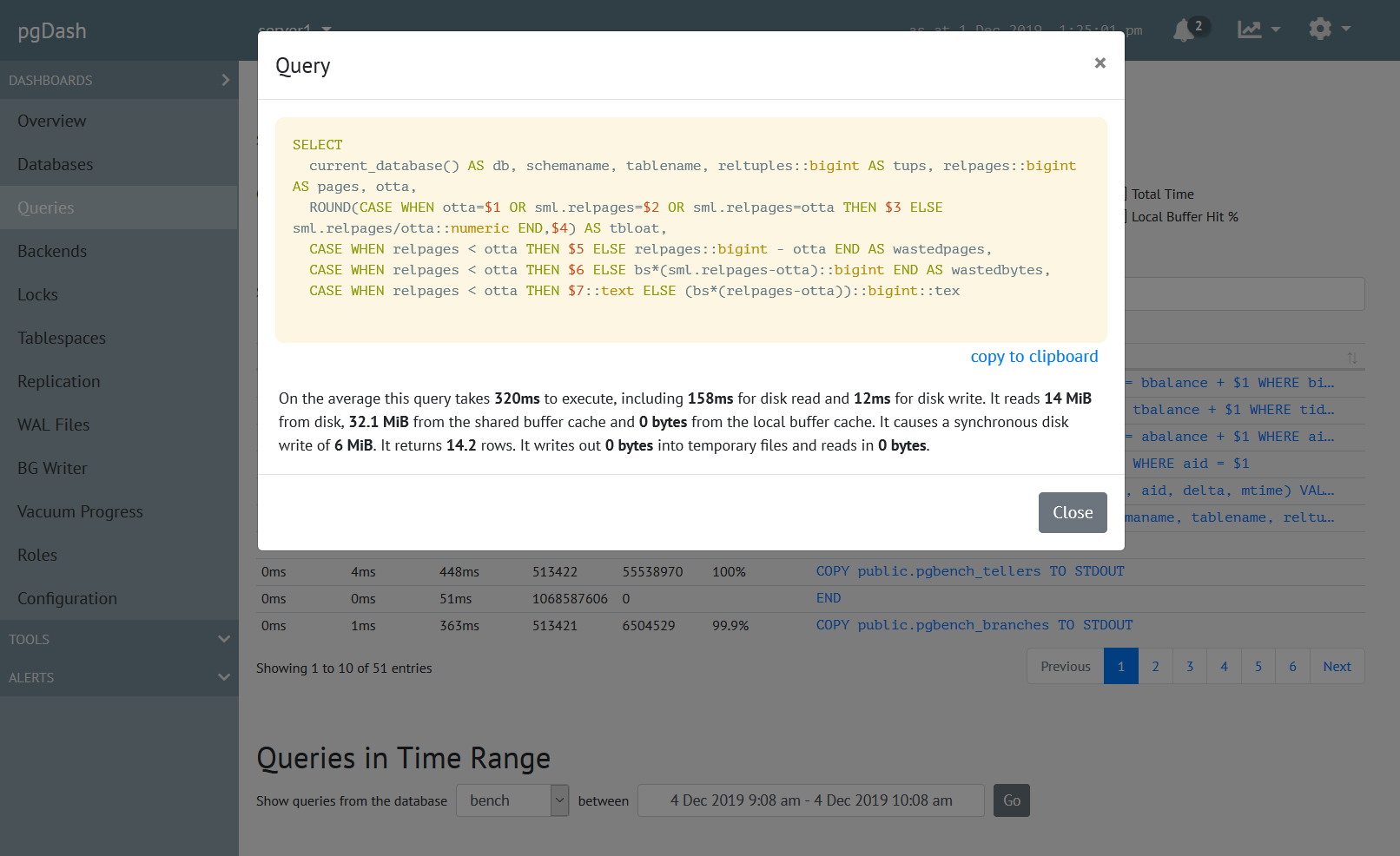

The current state of the art with Postgres is to mine the log file for query plans and store them elsewhere. But perhaps the most handicapping problem is not being able to associate the query plan with the corresponding analytics from pg_stat_statements. The way pgDash does this is to parse both the SQL query texts from pg_stat_statements and auto_explain output, adjust for the mangling done by pg_stat_statements, and try to match the two. It requires a full PostgreSQL-dialect SQL parser.

Baselining, setting policies for plan selection, etc. are just not possible in core PostgreSQL currently.

There are a few extensions out there that are basically improved versions of pg_stat_statements, but the extra steps involved in using a third party extension makes it a challenge for most people, especially if they’re using a managed Postgres provider.

Tuning

PostgreSQL has a plethora of tuning options, starting from the under-configured-by-default shared_buffers setting. Some are easy to understand and set, like the number of parallel workers for various operations (max_worker_processes, max_parallel_* etc). Others are a bit obscure (wal_compression, random_page_cost etc) but generally beneficial. The most vexing ones though, are the ones that need quantifiable information about the workload.

For example, if work_mem is too low, queries might use temporary disk files; if too high, and there are enough simultaneous queries, Postgres backend processes might be OOM-killed. So how do you figure out what number to set it to?

Practically, especially with OLTP workloads and web-application workloads, it is impossible to predict what the peak memory demand for queries would be. Your best bet is to set it a reasonable value, then monitor queries to see if any of them could have benefitted from a higher value of work_mem.

And how do you do that? You’ll need to get the extension auto_explain to log the query execution plans of each query, extract those from Postgres’ log files, examine each query plan to see if it uses disk-based external merges or a bitmap heap scan with lossy heap blocks.

Not impossible, just hard.

About pgDash

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.