PostgreSQL supports the standard transaction isolation levels as specified by ANSI SQL, with a default level of Read Committed. There are, however, fairly serious implications to this choice. At the very least, it is important to understand the consequences of the choice of isolation level for your application.

Read on to learn more.

Isolation, Concurrency and Anomalies

Modern databases are expected to perform consistently, correctly and even efficiently when multiple clients carry out multiple transactions simultaneously. Such concurrency is possible only if transactions do not trod on each other – that is, there is some amount of isolation between them.

Consider a situation where an ongoing transaction has inserted a row in a table. If now a second transaction sees this row through a SELECT query, and takes decisions based on the contents of this row, this might in turn lead to data inconsistency or business logic errors. This is an example of an anomaly. This particular type of anomaly (reading of uncommitted rows) is called a phantom read or dirty read.

We could conclude that this anomaly occurred because there was insufficient isolation between the transactions. This, and other anomalies, could be prevented by perfectly isolating transactions from each other. However, it turns out perfect isolation while supporting concurrency is expensive, and may reduce the database system throughput to unacceptable levels. The graded isolation levels specified by the standard represent the trade-off between isolation and performance. An isolation level of read uncommitted would allow a dirty read, but not the higher levels of read committed, repeatable read or serializable.

And there are still further complications: there are well-documented anomalies not covered in the standard, there is no theoretically exhaustive list of possible anomalies, and individual DBMS architectures and locking implementations have their own quirky behavior. Pragmatically, the best option is to experiment with, learn and understand your own DBMS’ behavior well enough to avoid the traps that anomalies set for the unwary.

The Three Isolation Levels in PostgreSQL

By virtue of its architecture, PostgreSQL cannot go below the read committed isolation level (thereby avoiding anomalies like dirty read mentioned above). The other two higher isolation levels it supports are, in order, repeatable read and serializable.

Practically, repeatable read prevents some anomalies that are allowed at read uncommitted, whereas serializable is a whole different beast and provides almost perfect isolation.

Arguably, the default isolation level of PostgreSQL should have been the one that provides maximum isolation (“serializable”) — similar to how other default settings tend to maximize data durability — but it is, unfortunately, the one that allows maximum anomalies (“read committed”). Caveat emptor.

Let’s meet some anomalies that can happen at various levels.

Read Committed Level

At this level a transaction does not see the rows modified or inserted by another as-yet-unfinished transaction. However, it does mean that if another transaction starts and commits changes while the first transaction is ongoing, the first transaction can see the data both before and after the second transaction’s changes.

This means that if within a transaction you do a SELECT, and then do the exact SELECT again later within the same transaction, there is no guarantee that the results will be the same. Have a look at one transaction trying to get a leaderboard of players based on XP, while another transaction increments a certain player’s XP concurrently:

The second SELECT returns a different result than the first, even though the data was not modified by the first transaction at all. This anomaly is called non-repeatable read.

Here’s another anomaly, called a read skew. Assume a transaction wants to grant 10% extra XP to all players who have at least 5000 XP. As PostgreSQL runs this transaction, it encounters a row that has been locked for update by another transaction. It waits for the lock, re-reads the row, updates it and continues. It seems logical, but the consequences may be surprising:

At start, Bob has 5000 XP and the first transaction reduces it to 4000. Concurrently, the second transaction grants 10% more XP to all players with 5000 XP or more. Intuitively, we expect either Bob to be excluded from the grant because the first transaction happens first (XP is 4000 finally) or Bob to be granted the bonus because the second transaction happens first (XP is 5500 finally). So how do you explain the final amount of 4400 ?!

Repeatable Read Level

Raising the isolation level of a transaction from read committed to repeatable

read prevents the anomalies described above. To raise the isolation level, start

the transaction with a BEGIN ISOLATION LEVEL REPEATABLE READ statement, or use

SET TRANSACTION ISOLATION LEVEL REPEATABLE READ, or change the default transaction

isolation level using the configuration setting default_transaction_isolation.

Running the first example (non-repeatable read) at this isolation level will result in the second SELECT returning the same set of rows as the first SELECT. Running the second example (read skew) will result in the UPDATE in the second transaction erroring out with:

ERROR: could not serialize access due to concurrent update

Now assume players can enroll in guilds, with a limit of 10 players per guild. Consider a transaction adding a player into a guild. It has to first count the number of players in the guild currently, then add the player into it if the count is not already 10. What happens if two such transaction run concurrently and the count is 9?

We now have a guild with 11 players, even though both transactions completed successfully without any application logic or PostgreSQL errors. Even if the transaction checked the count again before committing, it would not be able to detect this situation!

This anomaly is called a write skew. Had the transactions run at the serializable isolation level, the transaction committing second would have errored out with:

ERROR: could not serialize access due to read/write dependencies among transactions

DETAIL: Reason code: Canceled on identification as a pivot, during commit attempt.

HINT: The transaction might succeed if retried.

Serializable Level

Under the hood, the serializable isolation level in PostgreSQL is implemented using a sophisticated, still-state-of-the-art algorithm called Serializable Snapshot Isolation (SSI). This level prevents all of the anomalies described above. Why not use it all the time then? There are two reasons:

Performance

Although PostgreSQL’s implementation of SSI is possibly one of the most efficient ones out there, it is still more expensive than the other isolation levels. Usually though, if your isolation concerns are important enough, this overhead is a price worth paying.

Serialization Failures

A bigger reason for switching to serializable by default is that transactions can fail for no fault of their own. When Postgres detects concurrent transactions that cannot be serialized, it declares a “serialization failure” and aborts transactions. Transactions that get aborted have to be retried at application level.

Given that most large enterprise applications typically contain layers of database access logic, ORMs, legacy code that is expensive to modify, multiple applications and tools written in different languages and what not, it can be a nightmare to enforce a consistent transaction retry logic in application code. Even for a brand new application, it would be nearly impossible to avoid frameworks and ORMs that have limited or no support for automatic transaction retrying.

Learn More

There is a lot of history and theory behind transaction isolation. This blog post gives a quick overview of the story so far. This chapter in PostgreSQL docs give an overview of the PostgreSQL side of things. The books PostgreSQL 14 Internals and The Internals of PostgreSQL offer more in-depth coverage.

About pgDash

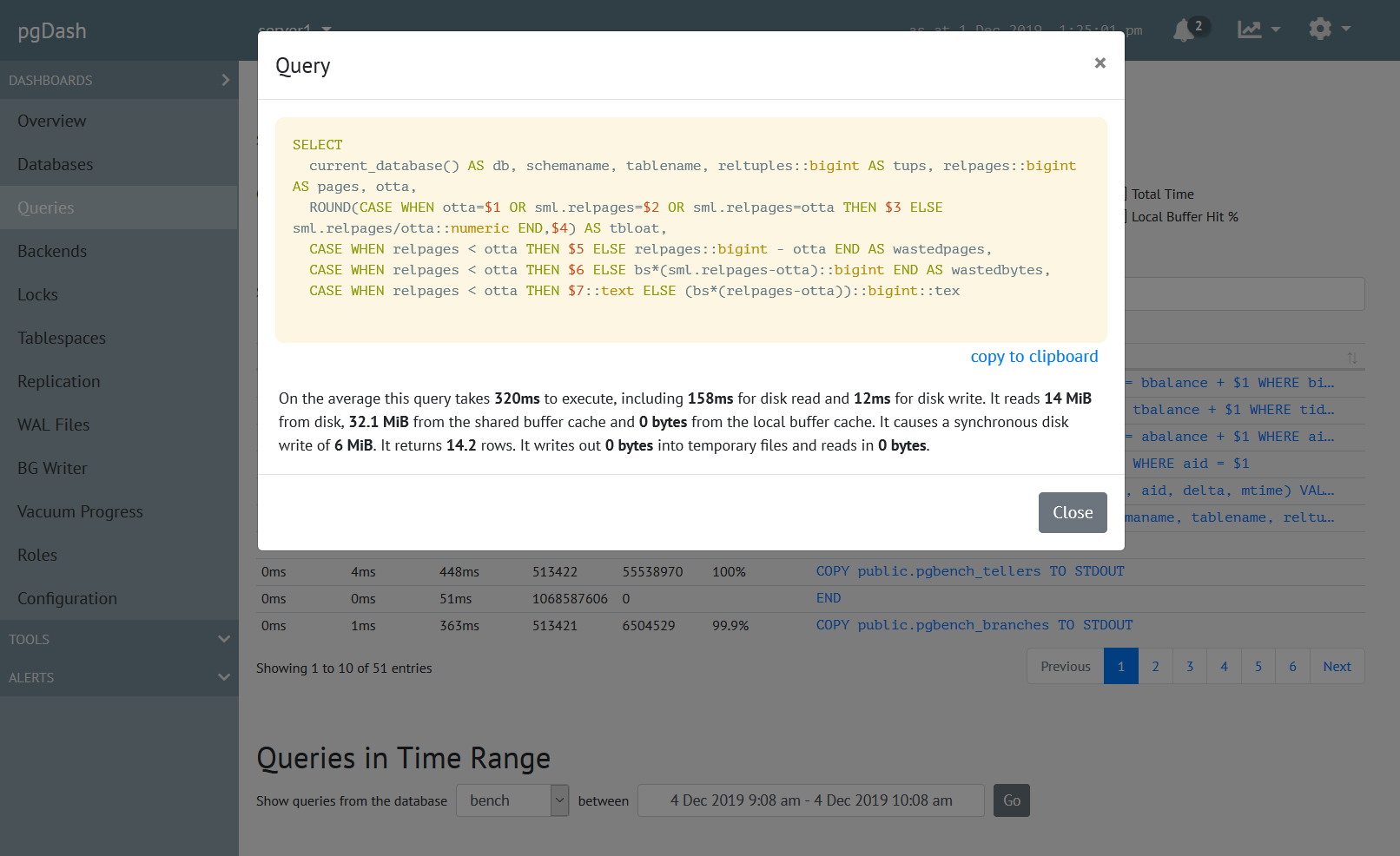

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.