Version 10 of PostgreSQL added the declarative table partitioning feature. In version 11 (currently in beta), you can combine this with foreign data wrappers, providing a mechanism to natively shard your tables across multiple PostgreSQL servers.

Declarative Partitioning

Consider a table that store the daily minimum and maximum temperatures of cities for each day:

CREATE TABLE temperatures (

at date,

city text,

mintemp integer,

maxtemp integer

);The table spec is intentionally devoid of column constraints and primary key to keep things simple – we’ll add these later.

It is very common to find that in many applications the recent-most data is more frequently accessed. Think current financial year, this month, last hour and so on. As our “temperatures” table grows, it makes sense to move out the old data into another table, with the same structure. We can for example, do this:

CREATE TABLE temperatures_2017 (LIKE temperatures);

INSERT INTO temperatures_2017 SELECT * FROM temperatures WHERE

extract(year from at) = 2017;

DELETE FROM temperatures WHERE extract(year from at) = 2017;to move all entries from the year 2017 into another table. This leaves the main “temperatures” table smaller and faster for the application to work with. As a bonus, if you now need to delete old data, you can do so without slowing down inserts of incoming data into the main/current table because the old data lives in another table.

But having multiple, distinct tables means that the application code now has to change. If it has to access older data, say getting the annual min and max temperatures of a city, it now has to find out what tables are present in the schema, query each of them and combine the results from each table. Can we do this without changing the application code?

Partitioning makes this possible. In PostgreSQL 10, you can create the “temperatures” table like this:

CREATE TABLE temperatures (

at date,

city text,

mintemp integer,

maxtemp integer

)

PARTITION BY RANGE (at);This makes “temperatures” a partition master table, and tells PostgreSQL that we’re going to create multiple partitioned tables storing non-overlapping data, each with a different set of “at” values. The master table itself does not hold any data, but can be queried from and inserted to by the application – which is ignorant of the child partitions holding the actual data.

And here are our partitions:

CREATE TABLE temperatures_2017

PARTITION OF temperatures

FOR VALUES FROM ('2017-01-01') TO ('2018-01-01');

CREATE TABLE temperatures_2018

PARTITION OF temperatures

FOR VALUES FROM ('2018-01-01') TO ('2019-01-01');We now have two tables, one that will store data for 2017 and another for 2018. Note that the “from” value is inclusive, but the “to” value is not. Let’s try it out:

temp=# INSERT INTO temperatures (at, city, mintemp, maxtemp)

temp-# VALUES ('2018-08-03', 'London', 63, 90);

INSERT 0 1

temp=# INSERT INTO temperatures (at, city, mintemp, maxtemp)

temp-# VALUES ('2017-08-03', 'London', 59, 70);

INSERT 0 1

temp=# SELECT * FROM temperatures;

at | city | mintemp | maxtemp

------------+--------+---------+---------

2017-08-03 | London | 59 | 70

2018-08-03 | London | 63 | 90

(2 rows)

temp=# SELECT * FROM temperatures_2017;

at | city | mintemp | maxtemp

------------+--------+---------+---------

2017-08-03 | London | 59 | 70

(1 row)

temp=# SELECT * FROM temperatures_2018;

at | city | mintemp | maxtemp

------------+--------+---------+---------

2018-08-03 | London | 63 | 90

(1 row)The “application” is able to insert into and select from the main table, but PostgreSQL routes the actual data into the appropriate child tables. (Oh and BTW, those temperatures are real!)

Indexes and Constraints

Indexes and table and column constraints are actually defined at the partition table level, since that’s where the actual data resides. You can set these during the partition table creation:

CREATE TABLE temperatures_2017

PARTITION OF temperatures (

mintemp NOT NULL,

maxtemp NOT NULL,

CHECK (mintemp <= maxtemp),

PRIMARY KEY (at, city)

)

FOR VALUES FROM ('2017-01-01') TO ('2018-01-01');PostgreSQL 11 lets you define indexes on the parent table, and will create indexes on existing and future partition tables. Read more here.

Foreign Data Wrapper

The foreign data wrapper functionality has existed in Postgres for some time. PostgreSQL lets you access data stored in other servers and systems using this mechanism. What we’re interested in is “postgres_fdw”, which is what will allow us to access one Postgres server from another.

“postgres_fdw” is an extension present in the standard distribution, that can be installed with the regular CREATE EXTENSION command:

CREATE EXTENSION postgres_fdw;Let’s assume you have another PostgreSQL server “box2” with a database called “box2db”. You can create a “foreign server” for this:

CREATE SERVER box2 FOREIGN DATA WRAPPER postgres_fdw

OPTIONS (host 'box2', dbname 'box2db');Let’s also map our user “alice” (the user you’re logged in as) to box2 user “box2alice”. This allows “alice” to be “box2alice” when accessing remote tables:

CREATE USER MAPPING FOR alice SERVER box2

OPTIONS (user 'box2alice');You can now access tables (also views, matviews etc) on box2. First, create a table on box2, and then a “foreign table” on your server. The foreign table does not hold any actual data, but serves as a proxy for accessing the table on box2.

-- on box2

CREATE TABLE foo (a int);

-- on your server

IMPORT FOREIGN SCHEMA public LIMIT TO (foo)

FROM SERVER box2 INTO public;The foreign table in your server can participate in transactions the same way as normal tables. Applications do not have to know that the tables it interacts with are local or foreign – although if your app runs a SELECT which might pull in lots of rows from a foreign table it might slow things down. In Postgres 10, improvements were made for pushing down joins and aggregates to the remote server.

Combining Partitioning and FDW

And now for the fun part: setting up partitions on remote servers.

First, let’s create the physical partition table on box2:

-- on box2

CREATE TABLE temperatures_2016 (

at date,

city text,

mintemp integer,

maxtemp integer

);And then create the partition on your server, as a foreign table:

CREATE FOREIGN TABLE temperatures_2016

PARTITION OF temperatures

FOR VALUES FROM ('2016-01-01') TO ('2017-01-01')

SERVER box2;You can now insert and query from your own server:

temp=# INSERT INTO temperatures (at, city, mintemp, maxtemp)

temp-# VALUES ('2016-08-03', 'London', 63, 73);

INSERT 0 1

temp=# SELECT * FROM temperatures ORDER BY at;

at | city | mintemp | maxtemp

------------+--------+---------+---------

2016-08-03 | London | 63 | 73

2017-08-03 | London | 59 | 70

2018-08-03 | London | 63 | 90

(3 rows)

temp=# SELECT * FROM temperatures_2016;

at | city | mintemp | maxtemp

------------+--------+---------+---------

2016-08-03 | London | 63 | 73

(1 row)There you have it! Being able to insert rows into a remote partition is new in version 11. With this feature, you can now have your data sharded logically (partitions) and physically (FDW).

Data Management

Commands like VACUUM and ANALYZE work as you’d expect with partition master tables – all local child tables are subject to VACUUM and ANALYZE. Partitions can be detached, it’s data manipulated without the partition constraint, and then reattached. Partition child tables themselves can be partitioned.

Moving data around (“resharding”) can be done with regular SQL statements (insert, delete, copy etc.). Partition-local indexes and triggers can be created.

Adding redundancy to your shards is easily achieved with logical or streaming replication.

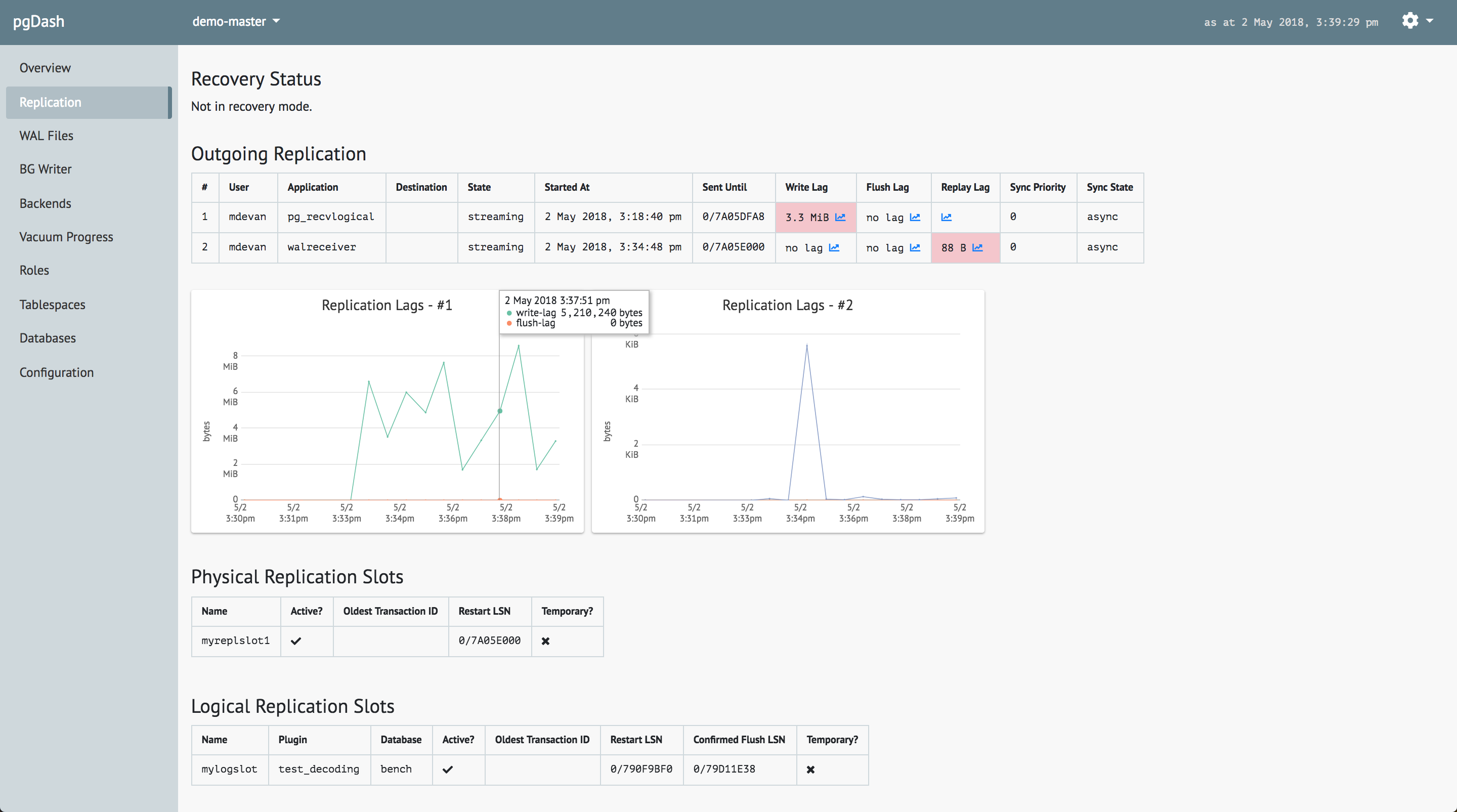

About pgDash

pgDash is an in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, alerting and more.