PostgreSQL comes with the ability to do incremental backups and point-in-time recovery out of the box. Read on to learn more about the settings and procedures to achieve this.

It Starts With WAL Files

WAL stands for Write Ahead Log. WALs are used in nearly all modern RDBMS systems to provide durable and atomic transactions.

Changes to the data contained in a PostgreSQL database cluster managed by a single PostgreSQL server process is possible only via transactions. The modifications made to the data by transactions are recorded as an ordered sequence of WAL records. These records are written into fixed-length files called WAL segment files, or simply WAL files.

WAL files live in $PGDATA/pg_wal, where $PGDATA is the data directory for

the database cluster. On a default Debian installation for example, the WAL file

directory for the main cluster is /var/lib/postgresql/10/main/pg_wal. Here is

how it looks like:

# pwd

/var/lib/postgresql/10/main/pg_wal

# ls -l

total 278532

-rw------- 1 postgres postgres 16777216 May 7 08:48 00000001000000000000000B

-rw------- 1 postgres postgres 16777216 May 7 10:08 00000001000000000000000C

-rw------- 1 postgres postgres 16777216 May 7 10:08 00000001000000000000000D

-rw------- 1 postgres postgres 16777216 May 7 10:08 00000001000000000000000E

-rw------- 1 postgres postgres 16777216 May 7 10:08 00000001000000000000000F

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000010

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000011

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000012

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000013

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000014

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000015

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000016

-rw------- 1 postgres postgres 16777216 May 7 10:08 000000010000000000000017

-rw------- 1 postgres postgres 16777216 May 16 20:52 000000010000000000000018

-rw------- 1 postgres postgres 16777216 May 16 20:56 000000010000000000000019

-rw------- 1 postgres postgres 16777216 May 26 08:52 00000001000000000000001A

-rw------- 1 postgres postgres 16777216 Jun 2 09:59 00000001000000000000001B

drwx------ 2 postgres postgres 4096 Mar 30 10:06 archive_statusWAL files are generated incrementally, in sequence, starting from cluster creation. They keep getting generated for as long as modifications happen to the cluster. The WAL file mechanism is essential to the working of PostgreSQL, and cannot be turned off.

After the changes are first written out as WAL records, they have to be applied to the on-disk representation of the data itself. This process is called checkpointing, and happens in the background automatically (it can also be forced manually). The point until which checkpointing was done is called the REDO point. Checkpointing is also an essential part of Postgres architecture and cannot be turned off.

WAL File Retention

In normal course of PostgreSQL server operation, WAL files will keep getting

written into the pg_wal directory. But why have them around?

One reason is crash recovery. If the PostgreSQL server crashes and restarts, it starts applying changes from WAL records into the data files (checkpointing) since the last REDO point. This guarantees that the data files are consistent with the last completed transaction.

Another reason is related to streaming replication. Streaming replication works by sending WAL records over to standby servers, which store these locally and perform checkpoints. Standbys can lag behind the server they are replicating from (called the primary). For example, if the primary has generated 100 WAL records and the standby has received and applied the first 80, the most recent 20 are required to be available so that the standby can receive and apply from record 81 onwards.

But surely the very old WAL files can be deleted? Yes. PostgreSQL can be instructed to retain the most recent WAL files and delete the older ones. There are three relevant configuration options:

- wal_keep_segments - sets the minimum number of recentmost WAL files to be retained in the WAL file directory

- max_wal_size - specifies the maximum total size of WAL files in the WAL file

directory. If this is exceeded, older ones are deleted. However, there might

be reasons (including a high value for

wal_keep_segments) that can prevent this setting from being honored. - min_wal_size - specifies a minimum total size for WAL files. As long as the actual size stays below this value, no files will be deleted.

In real life it is not possible, or required, to store all previous WAL files

under the pg_wal directory.

WAL File Archival

The real value of WAL files is that they are a stream of changes that can be recorded and replayed to get a consistent replica of a PostgreSQL cluster. PostgreSQL provides a way by which we can copy out (or “archive”) each WAL file after it gets created – the archive_command configuration option.

This option specifies a shell command string that is invoked after each WAL file is created. Here are some examples:

# Copy the file to a safe location (like a mounted NFS volume)

archive_command = 'cp %p /mnt/nfs/%f'

# Not overwriting files is a good practice

archive_command = 'test ! -f /mnt/nfs/%f && cp %p /mnt/nfs/%f'

# Copy to S3 bucket

archive_command = 's3cmd put %p s3://BUCKET/path/%f'

# Copy to Google Cloud bucket

archive_command = 'gsutil cp %p gs://BUCKET/path/%f'

# An external script

archive_command = '/opt/scripts/archive_wal %p'There are 2 other options also, that must be set:

# this must be "on" to enable WAL archiving

archive_mode = on

# has to be "replica" (default) or "logical" for WAL archiving

wal_level = replicaWAL Compression

You can compress the WAL files before copying them into a long-term/safe storage location. However, there an option called wal_compression. Turning this on will cause PostgreSQL to compress the individual WAL records within the WAL files. The WAL files itself will be of the same size (typically 16 MB), but will contain a sequence of compressed records rather than plain records.

Continuous Archiving

WAL archiving is also called continuous archiving and is in effect, incremental backup.

Before starting this process of incremental backup, a full backup is required. This establishes a baseline upon which WAL files can be incrementally restored. A full backup can be taken either by:

- shutting down the Postgres server process and copying the cluster data directory (while preserving permissions), or

- using the

pg_basebackupon a running Postgres server.

Point-In-Time-Recovery (PITR)

PITR refers to PostgreSQL’s ability to start from the restore of a full backup, then progressively fetch and apply archived WAL files up to a specified timestamp.

To do this, we have to create a file called “recovery.conf” in the restored cluster data directory and start up a Postgres server for that data directory. The recovery.conf file contains the target timestamp, and looks like this:

restore_command = 'cp /tmp/demo/archive/%f "%p"'

recovery_target_time = '2019-06-04 14:10:00'The restore_command specifies how to fetch a WAL file required by PostgreSQL. It is the inverse of archive_command. The recovery_target_time specifies the time until when we need the changes.

When a PostgreSQL server process starts up and discovers a recovery.conf file in the data directory, it starts up in a special mode called “recovery mode”. When in recovery mode, client connections are refused. Postgres fetches WAL files and applies them until the recovery target (in this case, changes up to the specified timestamp) is achieved. When the target is achieved, the server by default pauses WAL replay (other actions are possible). At this point, you are supposed to examine the state of the restore and if everything looks ok, unpause to exit recovery mode and continue normal operation.

Putting It All Together

All that was a whole bunch of theory and text, let’s try it out to see how it all works in practice.

First let’s initialize a new cluster:

/tmp/demo$ pg_ctl -D clus1 initdb

The files belonging to this database system will be owned by user "postgres".

This user must also own the server process.

The database cluster will be initialized with locale "C.UTF-8".

The default database encoding has accordingly been set to "UTF8".

The default text search configuration will be set to "english".

Data page checksums are disabled.

creating directory clus1 ... ok

creating subdirectories ... ok

selecting default max_connections ... 100

selecting default shared_buffers ... 128MB

selecting dynamic shared memory implementation ... posix

creating configuration files ... ok

running bootstrap script ... ok

performing post-bootstrap initialization ... ok

syncing data to disk ... ok

WARNING: enabling "trust" authentication for local connections

You can change this by editing pg_hba.conf or using the option -A, or

--auth-local and --auth-host, the next time you run initdb.

Success. You can now start the database server using:

/usr/lib/postgresql/10/bin/pg_ctl -D clus1 -l logfile startWe’ll also create a directory that will serve as our safe storage location. Let’s call this “archive”.

/tmp/demo$ mkdir archive

/tmp/demo$ ls -l

total 8

drwxr-xr-x 2 postgres postgres 4096 Jun 4 14:02 archive

drwx------ 19 postgres postgres 4096 Jun 4 14:02 clus1We need to configure the archive settings we discussed earlier, before we can

start the server. So let’s add the following to the end of

clus1/postgres.conf:

port = 6000

wal_level = logical

archive_mode = on

archive_command = 'cp %p /tmp/demo/archive/%f'

archive_timeout = 60Our archive command simply copies the WAL file to the archive directory we created earlier.

We have also added the archive_timeout setting. Usually, a WAL file is created only when there are enough WAL records to fill a 16 MB WAL file. This means that for servers with few writes, you may have to wait a long time for a WAL file to be created. The setting archive_timeout tells Postgres that it must create a WAL file every so many seconds, irrespective of whether it is full or not.

Here we have set this to 60 (seconds), but this is only for the demo! You’d typically never want to keep it this low.

Let’s also make a copy of “clus1”. This is the equivalent of a full backup.

/tmp/demo$ cp -Rp clus1 clus2

/tmp/demo$ ls -l

total 12

drwxr-xr-x 2 postgres postgres 4096 Jun 4 14:02 archive

drwx------ 19 postgres postgres 4096 Jun 4 14:03 clus1

drwx------ 19 postgres postgres 4096 Jun 4 14:03 clus2Now we can start the cluster:

/tmp/demo$ pg_ctl -D clus1 -l log1 start

waiting for server to start.... done

server startedLet’s add in some data.

/tmp/demo$ psql -h /var/run/postgresql -p 6000 postgres

psql (10.8 (Ubuntu 10.8-0ubuntu0.18.04.1))

Type "help" for help.

postgres=# create database demo;

CREATE DATABASE

postgres=# \c demo

You are now connected to database "demo" as user "postgres".

demo=# create table tbl1 (col1 int);

CREATE TABLE

demo=# insert into tbl1 (col1) select generate_series(1, 10000);

INSERT 0 10000

demo=# select count(*) from tbl1;

count

-------

10000

(1 row)

demo=# select now();

now

-------------------------------

2019-06-04 14:05:05.657871+00

(1 row)

demo=# \qNote that the time is now 14:05. Let’s check if our archive command is working:

/tmp/demo$ ls -l archive/

total 16384

-rw------- 1 postgres postgres 16777216 Jun 4 14:04 000000010000000000000001Yes, we have one single archive file. Our last change was at 14:05, let’s now wait for a few minutes and then make some more changes.

/tmp/demo$ psql -h /var/run/postgresql -p 6000 demo

psql (10.8 (Ubuntu 10.8-0ubuntu0.18.04.1))

Type "help" for help.

demo=# select now();

now

-------------------------------

2019-06-04 14:16:06.093859+00

(1 row)

demo=# select count(*) from tbl1;

count

-------

10000

(1 row)

demo=# insert into tbl1 (col1) select generate_series(1, 100);

INSERT 0 100

demo=# select count(*) from tbl1;

count

-------

10100

(1 row)

demo=# \qSo now we have added 100 more rows, at 14:16. Let’s stop the server:

/tmp/demo$ pg_ctl -D clus1 stop

waiting for server to shut down.... done

server stopped

/tmp/demo$and check our archive again:

/tmp/demo$ ls -l archive/

total 65536

-rw------- 1 postgres postgres 16777216 Jun 4 14:04 000000010000000000000001

-rw------- 1 postgres postgres 16777216 Jun 4 14:05 000000010000000000000002

-rw------- 1 postgres postgres 16777216 Jun 4 14:09 000000010000000000000003

-rw------- 1 postgres postgres 16777216 Jun 4 14:16 000000010000000000000004Looks good. Now we’ll attempt to do a PITR recovery of clus2 up to the time 14:10.

First let’s edit clus2’s postgres.conf and add these lines at the end:

port = 6001

archive_mode = offInorder to replay the WAL files, we have to put the PostgreSQL server for clus2 (which we haven’t started yet) into recovery mode. To do this, create the file called “recovery.conf” in clus2:

/tmp/demo$ cat clus2/recovery.conf

restore_command = 'cp /tmp/demo/archive/%f "%p"'

recovery_target_time = '2019-06-04 14:10:00'This contains the restore_command which does the opposite of the earlier archive_command, namely copying the requested file from the archive directory to the pg_wal directory.

We’ve also set the recovery_target_time to 14:10.

Now we start clus2:

/tmp/demo$ pg_ctl -D clus2 -l log2 start

waiting for server to start.... done

server startedTo see what happened, let’s examine the log file:

/tmp/demo$ cat log2

2019-06-04 14:19:10.862 UTC [10513] LOG: listening on IPv4 address "127.0.0.1", port 6001

2019-06-04 14:19:10.864 UTC [10513] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.6001"

2019-06-04 14:19:10.883 UTC [10514] LOG: database system was shut down at 2019-06-04 14:02:31 UTC

2019-06-04 14:19:10.883 UTC [10514] LOG: starting point-in-time recovery to 2019-06-04 14:10:00+00

2019-06-04 14:19:10.903 UTC [10514] LOG: restored log file "000000010000000000000001" from archive

2019-06-04 14:19:10.930 UTC [10514] LOG: consistent recovery state reached at 0/16383E8

2019-06-04 14:19:10.930 UTC [10514] LOG: redo starts at 0/16383E8

2019-06-04 14:19:10.931 UTC [10513] LOG: database system is ready to accept read only connections

2019-06-04 14:19:11.037 UTC [10514] LOG: restored log file "000000010000000000000002" from archive

2019-06-04 14:19:11.079 UTC [10514] LOG: restored log file "000000010000000000000003" from archive

2019-06-04 14:19:11.122 UTC [10514] LOG: restored log file "000000010000000000000004" from archive

2019-06-04 14:19:11.141 UTC [10514] LOG: recovery stopping before commit of transaction 559, time 2019-06-04 14:16:24.875517+00

2019-06-04 14:19:11.141 UTC [10514] LOG: recovery has paused

2019-06-04 14:19:11.141 UTC [10514] HINT: Execute pg_wal_replay_resume() to continue.The recovery was quick (in real life, it may take hours or days) and the log states that it has stopped before a particular transaction (that has a timestamp of > 14:10). It also says that the recovery is paused and must be manually continued.

Let’s examine the data:

/tmp/demo$ psql -h /var/run/postgresql -p 6001 demo

psql (10.8 (Ubuntu 10.8-0ubuntu0.18.04.1))

Type "help" for help.

demo=# select count(*) from tbl1;

count

-------

10000

(1 row)We see that there are only 10000 rows. At 14:16, we’d added 100 more, which haven’t appeared in the table.

This looks good, so let’s resume:

demo=# select pg_wal_replay_resume();

pg_wal_replay_resume

----------------------

(1 row)The log file now reports that the recovery is complete and normal operations are restored:

2019-06-04 14:20:26.219 UTC [10514] LOG: redo done at 0/4002160

2019-06-04 14:20:26.219 UTC [10514] LOG: last completed transaction was at log time 2019-06-04 14:05:28.813325+00

cp: cannot stat '/tmp/demo/archive/00000002.history': No such file or directory

2019-06-04 14:20:26.228 UTC [10514] LOG: selected new timeline ID: 2

2019-06-04 14:20:26.272 UTC [10514] LOG: archive recovery complete

cp: cannot stat '/tmp/demo/archive/00000001.history': No such file or directory

2019-06-04 14:20:26.388 UTC [10513] LOG: database system is ready to accept connectionsAnd we have successfully recovered the cluster up until a specified time!

Further Reading

Here are a few starting points to discover more about WAL archiving, recovery mode and PITR:

- Docs: Recovery Configuration

- Docs: Continuous Archiving and PITR

- Chapter 9 from “The Internals of PostgreSQL” book

- Tools: WAL-E, WAL-G, Barman

About pgDash

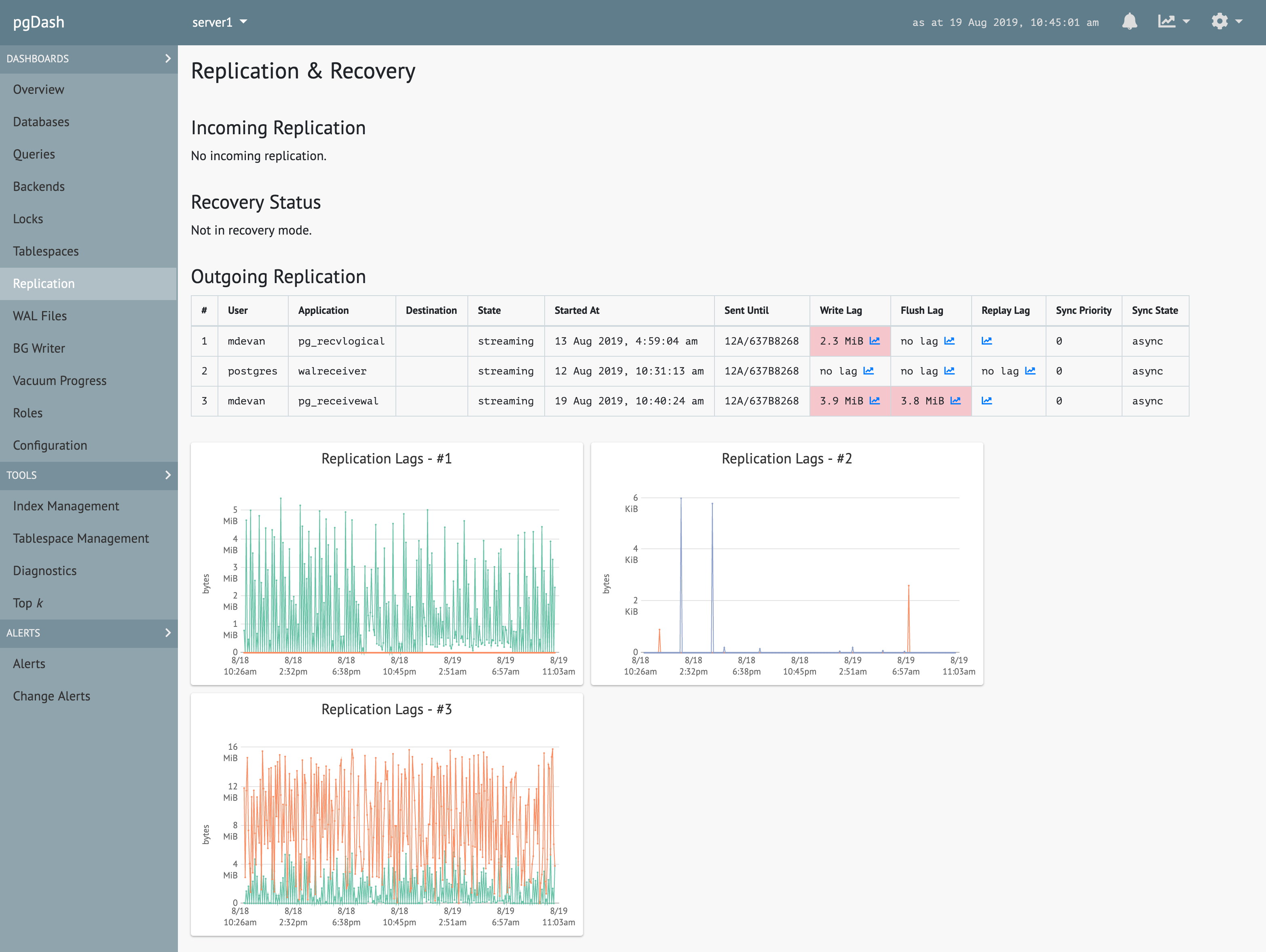

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics. pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, alerting, teams and more.

pgDash can monitor replication, locks, queries and more. It can also provide quick diagnostics and extensive alerts. Learn more here or signup today for a free trial.