PostgreSQL 10 came with the welcome addition of the logical replication feature. This provides a more flexible and easier means to replicate your tables than the regular streaming replication mechanism. However, it does have some limitations that may or may not prevent you from employing it for replication. Read on to learn more.

What Is Logical Replication Anyway?

Streaming Replication

Prior to v10, the only way to replicate data residing in a server was to replicate the changes at WAL-level. During it’s operation, a PostgreSQL server (the primary) generates a sequence of WAL files. The basic idea is to get these files over to another PostgreSQL server (the standby) which takes in these files and “replays” them to recreate the same changes happening at the primary server. The standby server stays in a read-only mode called the recovery mode, and any changes to the standby server are not permitted (that is, only read-only transactions are allowed).

The process of shipping the WAL files from the primary to standby is called log

shipping, and can be done manually (scripts to rsync changes from primary’s

$PGDATA/pg_wal directory to secondary’s) or through streaming replication.

Various features like replication slots, standby feedback and failover

were added over time to improve the reliability and usefulness of streaming

replication.

One big “feature” of streaming replication is that it’s all or nothing. All changes to all objects from all databases on the primary have to be shipped to the standby, and the standby has to import every change. It is not possible to selectively replicate a part of your database.

Logical Replication

Logical Replication, added in v10, makes it possible to do just that –

replicate only a set of tables to other servers. It is best

explained with an example. Let’s take a database called src in a server, and

create a table in it:

src=> CREATE TABLE t (col1 int, col2 int);

CREATE TABLE

src=> INSERT INTO t VALUES (1,10), (2,20), (3,30);

INSERT 0 3We’re also going to create a publication in this database (note that you need to have superuser privileges to do this):

src=# CREATE PUBLICATION mypub FOR ALL TABLES;

CREATE PUBLICATIONNow let’s goto a database dst on another server and create a similar table:

dst=# CREATE TABLE t (col1 int, col2 int, col3 text NOT NULL DEFAULT 'foo');

CREATE TABLEAnd we now setup a subscription here that will connect to the publication on

the source and start pulling in the changes. (Note that you need to have a user

repuser at the source server with replication privileges and read access to

the tables.)

dst=# CREATE SUBSCRIPTION mysub CONNECTION 'user=repuser password=reppass host=127.0.0.1 port=5432 dbname=src' PUBLICATION mypub;

NOTICE: created replication slot "mysub" on publisher

CREATE SUBSCRIPTIONThe changes are synced, and you can see the rows at the destination side:

dst=# SELECT * FROM t;

col1 | col2 | col3

------+------+------

1 | 10 | foo

2 | 20 | foo

3 | 30 | foo

(3 rows)The destination table has an extra column “col3”, which is not touched by the replication. The changes are replicated “logically” – so, as long as it possible to insert a row with t.col1 and t.col2 alone, the replication process will do so.

Compared to streaming replication, the logical replication feature is perfect for replicating, say, a single schema or a set of tables in a specific database to another server.

Replication of Schema Changes

Assume you have a Django application with it’s set of tables living in the source database. It’s easy and efficient to setup logical replication to bring over all these tables into another server, where you can run reporting, analytics, batch jobs, developer/customer support apps and the like without touching the “real” data and without affecting the production app.

Possibly the biggest limitation of Logical Replication currently is that it does not replicate schema changes – any DDL command executed at the source database does not cause a similar change in the destination database, unlike in streaming replication. For example, if we do this at the source database:

src=# ALTER TABLE t ADD newcol int;

ALTER TABLE

src=# INSERT INTO t VALUES (-1, -10, -100);

INSERT 0 1this gets logged in the destination log file:

ERROR: logical replication target relation "public.t" is missing some replicated columnsand the replication stops. The column has to be added “manually” at the destination, at which point the replication resumes:

dst=# SELECT * FROM t;

col1 | col2 | col3

------+------+------

1 | 10 | foo

2 | 20 | foo

3 | 30 | foo

(3 rows)

dst=# ALTER TABLE t ADD newcol int;

ALTER TABLE

dst=# SELECT * FROM t;

col1 | col2 | col3 | newcol

------+------+------+--------

1 | 10 | foo |

2 | 20 | foo |

3 | 30 | foo |

-1 | -10 | foo | -100

(4 rows)This means that if your Django application has added a new feature that needs

new columns or tables, and you’ve to run django-admin migrate on the source

database, the replication setup breaks.

Workaround

Your best bet to fix this issue would be to pause the subscription on the destination, migrate the destination first, then the source and then resume the subscription. You can pause and resume subscriptions like this:

-- pause replication (destination side)

ALTER SUBSCRIPTION mysub DISABLE;

-- resume replication

ALTER SUBSCRIPTION mysub ENABLE;If new tables are added and your publication is not “FOR ALL TABLES”, you’ll need to add them to the publication manually:

ALTER PUBLICATION mypub ADD TABLE newly_added_table;You’ll also need to “refresh” the subscription on the destination side to tell Postgres to start syncing the new tables:

dst=# ALTER SUBSCRIPTION mysub REFRESH PUBLICATION;

ALTER SUBSCRIPTIONSequences

Consider this table at the source, having a sequence:

src=# CREATE TABLE s (a serial PRIMARY KEY, b text);

CREATE TABLE

src=# INSERT INTO s (b) VALUES ('foo'), ('bar'), ('baz');

INSERT 0 3

src=# SELECT * FROM s;

a | b

---+-----

1 | foo

2 | bar

3 | baz

(3 rows)

src=# SELECT currval('s_a_seq'), nextval('s_a_seq');

currval | nextval

---------+---------

3 | 4

(1 row)The sequence s_a_seq was created to back the a column, of serial type.

This generates the autoincrementing values for s.a. Now let’s replicate this

into dst, and insert another row:

dst=# SELECT * FROM s;

a | b

---+-----

1 | foo

2 | bar

3 | baz

(3 rows)

dst=# INSERT INTO s (b) VALUES ('foobaz');

ERROR: duplicate key value violates unique constraint "s_pkey"

DETAIL: Key (a)=(1) already exists.

dst=# SELECT currval('s_a_seq'), nextval('s_a_seq');

currval | nextval

---------+---------

1 | 2

(1 row)Oops, what just happened? The destination tried to start the sequence from

scratch and generated a value of 1 for a. This is because logical replication

does not replicate the values for sequences since the next value of the

sequence is not stored in the table itself.

Workaround

If you think about it logically, you can’t modify the same “autoincrement” value from two places without bidirectional synchronization. If you really need an incrementing number in each row of a table, and need to insert into that table from multiple servers, you could:

- use an external source for the number, like ZooKeeper or etcd,

- use non-overlapping ranges – for example, the first server generates and inserts numbers in the range 1 to 1 million, the second one in the range 1 million to 2 million, and so on.

Tables Without Unique Rows

Let’s try creating a table without a primary key, and replicating it:

src=# CREATE TABLE nopk (foo text);

CREATE TABLE

src=# INSERT INTO nopk VALUES ('new york');

INSERT 0 1

src=# INSERT INTO nopk VALUES ('boston');

INSERT 0 1And the rows are now on the destination too:

dst=# SELECT * FROM nopk;

foo

----------

new york

boston

(2 rows)Now let’s try deleting the second row at the source:

src=# DELETE FROM nopk WHERE foo='boston';

ERROR: cannot delete from table "nopk" because it does not have a replica identity and publishes deletes

HINT: To enable deleting from the table, set REPLICA IDENTITY using ALTER TABLE.This happens because the destination won’t be able to uniquely identify the row that needs to be deleted (or updated) without a primary key.

Workaround

You can of course, alter the schema to include a primary key. In case you don’t

want to do that, you ALTER TABLE and set the “replica identify” to the full

row or a unique index. For example:

src=# ALTER TABLE nopk REPLICA IDENTITY FULL;

ALTER TABLE

src=# DELETE FROM nopk WHERE foo='boston';

DELETE 1The delete now succeeds, and the replication too:

dst=# SELECT * FROM nopk;

foo

----------

new york

(1 row)If your table really has no way to uniquely identify rows, then you’re a bit stuck. See the REPLICA IDENTITY section of ALTER TABLE for more information.

Differently Partitioned Destinations

Wouldn’t it be nice to have a source that is partitioned one way and a destination in a different way? For example, at the source we can keep parititions for each month, and at the destination for each year. Presumably the destination is a bigger machine, and we need to keep historical data, but need that data rarely.

Let’s create a monthly-partitioned table at the source:

src=# CREATE TABLE measurement (

src(# logdate date not null,

src(# peaktemp int

src(# ) PARTITION BY RANGE (logdate);

CREATE TABLE

src=#

src=# CREATE TABLE measurement_y2019m01 PARTITION OF measurement

src-# FOR VALUES FROM ('2019-01-01') TO ('2019-02-01');

CREATE TABLE

src=#

src=# CREATE TABLE measurement_y2019m02 PARTITION OF measurement

src-# FOR VALUES FROM ('2019-02-01') TO ('2019-03-01');

CREATE TABLE

src=#

src=# GRANT SELECT ON measurement, measurement_y2019m01, measurement_y2019m02 TO repuser;

GRANTAnd try creating a yearly-partitioned table at the destination:

dst=# CREATE TABLE measurement (

dst(# logdate date not null,

dst(# peaktemp int

dst(# ) PARTITION BY RANGE (logdate);

CREATE TABLE

dst=#

dst=# CREATE TABLE measurement_y2018 PARTITION OF measurement

dst-# FOR VALUES FROM ('2018-01-01') TO ('2019-01-01');

CREATE TABLE

dst=#

dst=# CREATE TABLE measurement_y2019 PARTITION OF measurement

dst-# FOR VALUES FROM ('2019-01-01') TO ('2020-01-01');

CREATE TABLE

dst=#

dst=# ALTER SUBSCRIPTION mysub REFRESH PUBLICATION;

ERROR: relation "public.measurement_y2019m01" does not exist

dst=#Postgres complains that it needs the partition table for Jan 2019, which we have no intention of creating on the destination.

This happens because logical replication works not at the base table level, but at the child table level. There is no real workaround for this – if you’re using partitions, the partition hierarchy must be the same on both sides of a logical replication setup.

Large Objects

Large objects cannot be replicated using logical replication. This is probably not a big deal nowadays, as storing large objects is not a common modern-day practice. It is also easier to store a reference to a large object on some external, redudant storage (like NFS, S3 etc.) and replicate that reference rather than storing and replicating the object itself.

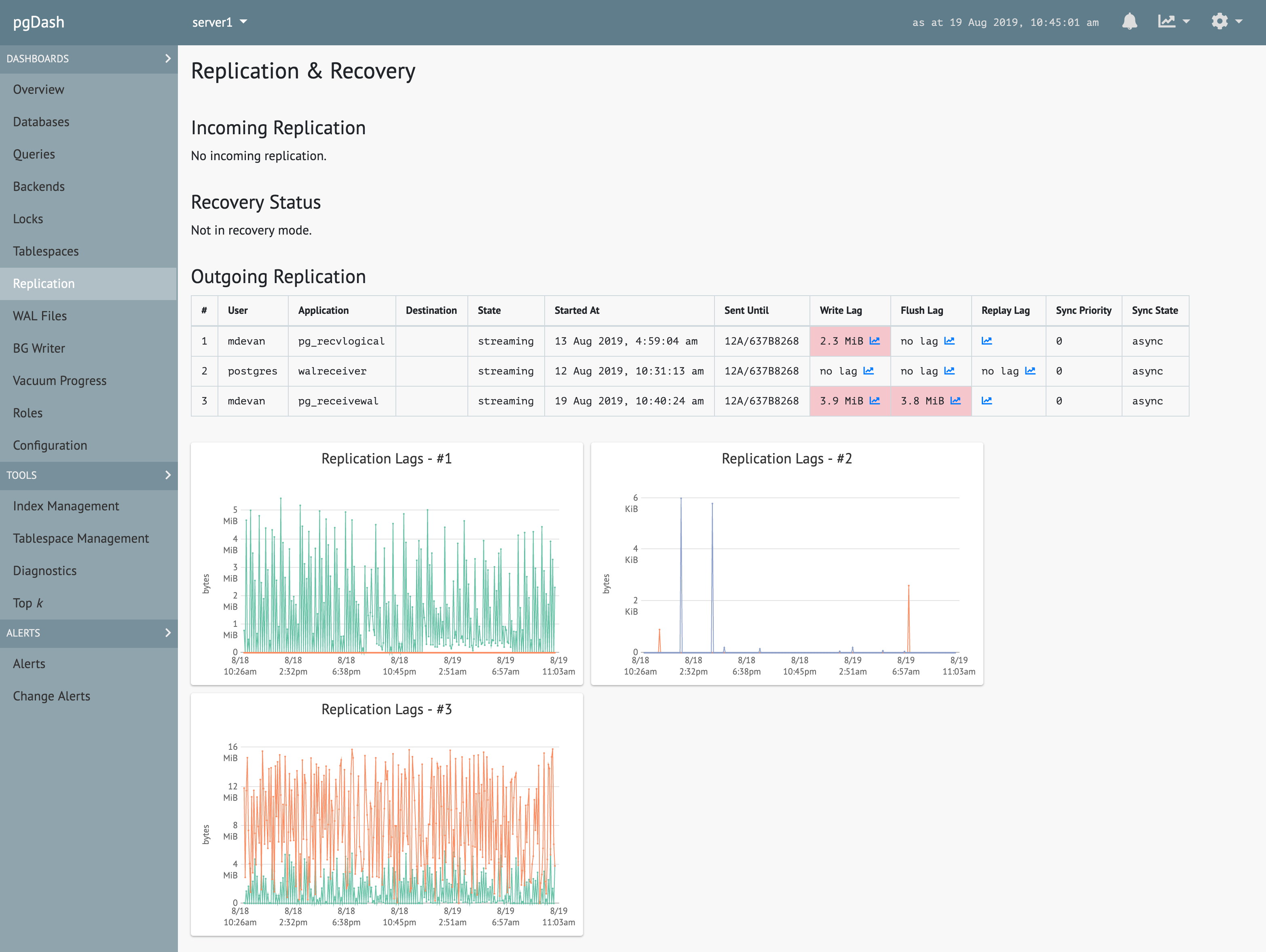

About pgDash

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics. pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, alerting, teams and more.

pgDash can monitor replication, locks, queries and more. It can also provide quick diagnostics and extensive alerts. Learn more here or signup today for a free trial.