With a Retrieval-Augmented Generation (RAG) system, you can create an AI assistant that can answer questions based on the information contained within your existing, in-house knowledge bases like wikis, manuals, training and reference material.

Read on to see how you can build your own RAG using PostgreSQL, pgvector, ollama and less than 200 lines of Go code.

Overview

We will use a few paragraphs from a story as our “document corpus”. For each document, we’ll generate an embedding of the document using Meta’s open source LLM Llama3, hosted locally using ollama. We will then store the document and it’s embedding into a PostgreSQL table. The embedding will be stored and accessed using the pgvector extension.

For querying, we’ll retrieve a single document from our table most relevant to the user’s query, and use llama3 again to generate a response.

ollama provides OpenAI-like HTTP APIs which we will use to generate embeddings and chat responses.

For the Go code, we’ll use jackc/pgx and pgvector-go to talk to Postgres, and the ollama client-side API package to make the HTTP API calls.

Running Models Using Ollama

Ollama is a recently released tool that allows you to run popular open source models (see list) locally on your machine. It provides a model-agnostic OpenAI-style REST API that can be used from client applications. See the docs for installing and running ollama.

Once ollama is installed on the machine, we can run the llama3 model in ollama simply with:

$ ollama pull llama3

pulling manifest

pulling 00e1317cbf74... 100% ▕████████████████████████████████████████████████████████▏ 4.7 GB

pulling 4fa551d4f938... 100% ▕████████████████████████████████████████████████████████▏ 12 KB

pulling 8ab4849b038c... 100% ▕████████████████████████████████████████████████████████▏ 254 B

pulling 577073ffcc6c... 100% ▕████████████████████████████████████████████████████████▏ 110 B

pulling ad1518640c43... 100% ▕████████████████████████████████████████████████████████▏ 483 B

verifying sha256 digest

writing manifest

removing any unused layers

success

Note that ollama’s HTTP server listens on 127.0.0.1:11434 by default. If

you’re running ollama on one machine and accessing it from another, see the

docs.

Test it with curl:

$ curl -X POST http://192.168.0.107:11434/api/embeddings -d '{

"model": "llama3",

"prompt":"Researchers have found that cats display behavioral patterns and social traits that were previously thought to be unique to humans and primates."

}'

{"embedding":[-4.317752838134766,-1.9526829719543457,-2.889270305633545,0.6311468482017517,1.636886477470398,1.2741944789886475,-3.402531147003174,-1.3292253017425537,

[...snip...]

Installing pgvector

pgvector is a Postgres extension available for Postgres versions 12 to 16. If you are using the pgdg APT repository, you can install it with:

sudo apt install postgresql-16-pgvector

Restart of the Postgres server is not required. pgvector is available on other systems also (docker, yum, many hosting providers, etc) – see the installation notes for more info.

Once installed, we can set up the extension for a database:

postgres=# \c ragdemo

You are now connected to database "ragdemo" as user "postgres".

ragdemo=# create extension vector;

CREATE EXTENSION

Let’s also create a table to store the document and it’s embedding:

ragdemo=# create table items (id serial primary key, doc text, embedding vector(4096));

CREATE TABLE

The type vector is provided by the pgvector extension. The (4096) indicates

that we want to store vectors of dimension 4096. Each value of type vector

(4096) is basically an array of 4096 4-byte floating point numbers.

The Documents To Store

We’ll use 4 paragraphs from Arthur Conan Doyle’s Sherlock Holmes story The Boscombe Valley Mystery. The text is taken from Project Gutenberg etext #1661, in public domain. You can read the full story here. The individual paragraphs, as separate files, are archived alongside the code in the demo code git repo.

The Code

The code is available in a git repo on GitHub here, licensed under MIT license. To compile and run it yourself, first ensure you have a working Go installation, then:

git clone https://github.com/rapidloop/ragdemo.git

cd ragdemo

go build

Connecting to Postgres

The code uses the popular jackc/pgx driver to connect to a Postgres database specified using the environment variable DATABASE_URL. The format is same as what can be used with psql. Other standard Postgres environment variables like PGHOST, PGDATABASE etc are also understood. Some DATABASE_URL examples:

export DATABASE_URL=postgres:///ragdemo?host=/var/run/postgresql

export DATABASE_URL=postgres://myuser@myhost:6432/ragdemo

export PGPASSWORD=mysecretpass

It executes the following SQL to insert a document and it’s embedding:

INSERT INTO items (doc, embedding) VALUES ($1, $2)

and the following to get the most relevant document:

SELECT doc FROM items ORDER BY embedding <-> $1 LIMIT 1

The <-> operator between two values of type vector(1024) is provided by the pgvector extension. This operator returns the L2 distance between the two vectors. For other operators and more information, see the pgvector docs.

Talking to the Ollama APIs

Ollama provides simple HTTP REST APIs that are similar to OpenAI’s HTTP APIs. Ollama is itself written in Go, and provides a simple wrapper package to provide typed access to these APIs.

A client is created with the following code:

cli, err := api.ClientFromEnvironment()

The environment variable OLLAMA_HOST can be used client-side to provide the URL of the server in case it is not running on the same machine:

export OLLAMA_HOST=192.168.0.107:11434

Here is a request to generate an embedding using the model llama3:

req := &api.EmbeddingRequest{

Model: "llama3",

Prompt: doc,

}

resp, err := cli.Embeddings(context.Background(), req)

// resp.Embedding is a []float64 with 4096 entries

The embedding is converted from a []float64 to a []float32 and subsequently to a pgvector before using the pgx APIs to store it in the database.

To create a chat response, without streaming, the following code is used:

stream := false

req2 := &api.ChatRequest{

Model: "llama3",

Stream: &stream,

Messages: []api.Message{

{

Role: "user",

Content: fmt.Sprintf(`Using the given reference text, succinctly answer the question that follows:

reference text is:

%s

end of reference text. The question is:

%s

`, doc, prompt),

},

},

}

var response string

resp2fn := func(resp2 api.ChatResponse) error {

response = strings.TrimSpace(resp2.Message.Content)

return nil

}

err = cli.Chat(context.Background(), req2, resp2fn)

The actual chat response is in the variable response. This code also shows the prompt used for the generation step.

The Command-line interface

The code uses a couple of flag-s to allow the user to either:

- insert the document that is stored as file into the database, or

- use the given prompt to retrieve and generate a response

This is the command line usage:

$ ./ragdemo

Usage:

./ragdemo -insert {path-to-doc-file}

./ragdemo -query {query-text}

Environment variables:

DATABASE_URL url of database, like postgres://host/dbname

OLLAMA_HOST url or host:port of OLLAMA server

PG* standard postgres env. vars are understood

Putting It All Together

Storing a document

When invoked with -insert and the path to a document, ragdemo reads the

contents of the file, talks to the Llama3 model over Ollama’s HTTP API and

retrieves an embedding for the document. It then stores the document and its

embedding into the items table using the pgx APIs.

Note that nothing is output on success:

$ ./ragdemo -insert doc1

$ ./ragdemo -insert doc2

$ ./ragdemo -insert doc3

$ ./ragdemo -insert doc4

Querying the RAG system

When invoked with -query followed by a user prompt, typically a question or a

few keywords, ragdemo first generates an embedding for this prompt too. It

then compares this embedding to all other embeddings in the items table and

finds the “closest” one.

The “closest” one is determined using the “L2 distance operator” <-> provided

by pgvector. The one with the least value for distance is the “closest”, and

therefore the most relevant one to answer the user prompt with. ragdemo

retrieves only the single most relevant document and uses it for generation.

In the last step (generation), ragdemo will use the retrieved document and

call Llama3 again to create a chat response using the prompt shown in the code

above. The prompt contains the original prompt, augmented with the retrieved

document. The response is then printed out, to finally see some magic:

$ ./ragdemo -query "What was the verdict of McCarthy's inquest?"

The verdict of McCarthy's inquest was "wilful murder".

$ ./ragdemo -query "What did the gamekeeper see?"

According to the reference text, William Crowder, the game-keeper, lost sight of Mr. McCarthy and his son after a certain time. He didn't actually see anything significant in this case.

$ ./ragdemo -query "Did anyone see the McCarthys quarrelling?"

Yes, Patience Moran, a 14-year-old girl who was picking flowers in the woods, saw Mr. McCarthy and his son having a violent quarrel at the border of the wood and close to the lake. She heard Mr. McCarthy using strong language and saw the son raise his hand as if to strike his father.

$ ./ragdemo -query "So who killed McCarthy?"

According to the reference text, young Mr. McCarthy was arrested and a verdict of "wilful murder" was returned at the inquest. He was brought before the magistrates and the case was referred to the next Assizes. Therefore, based on the information provided, it appears that young Mr. McCarthy killed his father, Mr. McCarthy.

Next Steps

This was just a basic RAG demo using PostgreSQL, pgvector, ollama, Llama3 and Go. Here are some notes to take this further:

- There are models designed for generating embeddings, which are more efficient at that particular job than llama3. Check out this leaderboard as a starting point.

- If your documents are not in English, there might be better models.

- L2 distance may not be the optimal distance method for your chosen model. pgvector supports other methods too.

- Scanning the entire table and computing L2 distance against each embedding does not scale. Check out pgvector index support and other techniques.

- Retrieving and using more documents during generation, as well as including documents fetched using keyword matching or other search techniques can also enhance the relevance and quality of generated output.

- Tweaking of generation prompt and trying out LLMs with different capabilities for generation step, or even showing the user the outputs generated by multiple LLMs should also prove useful.

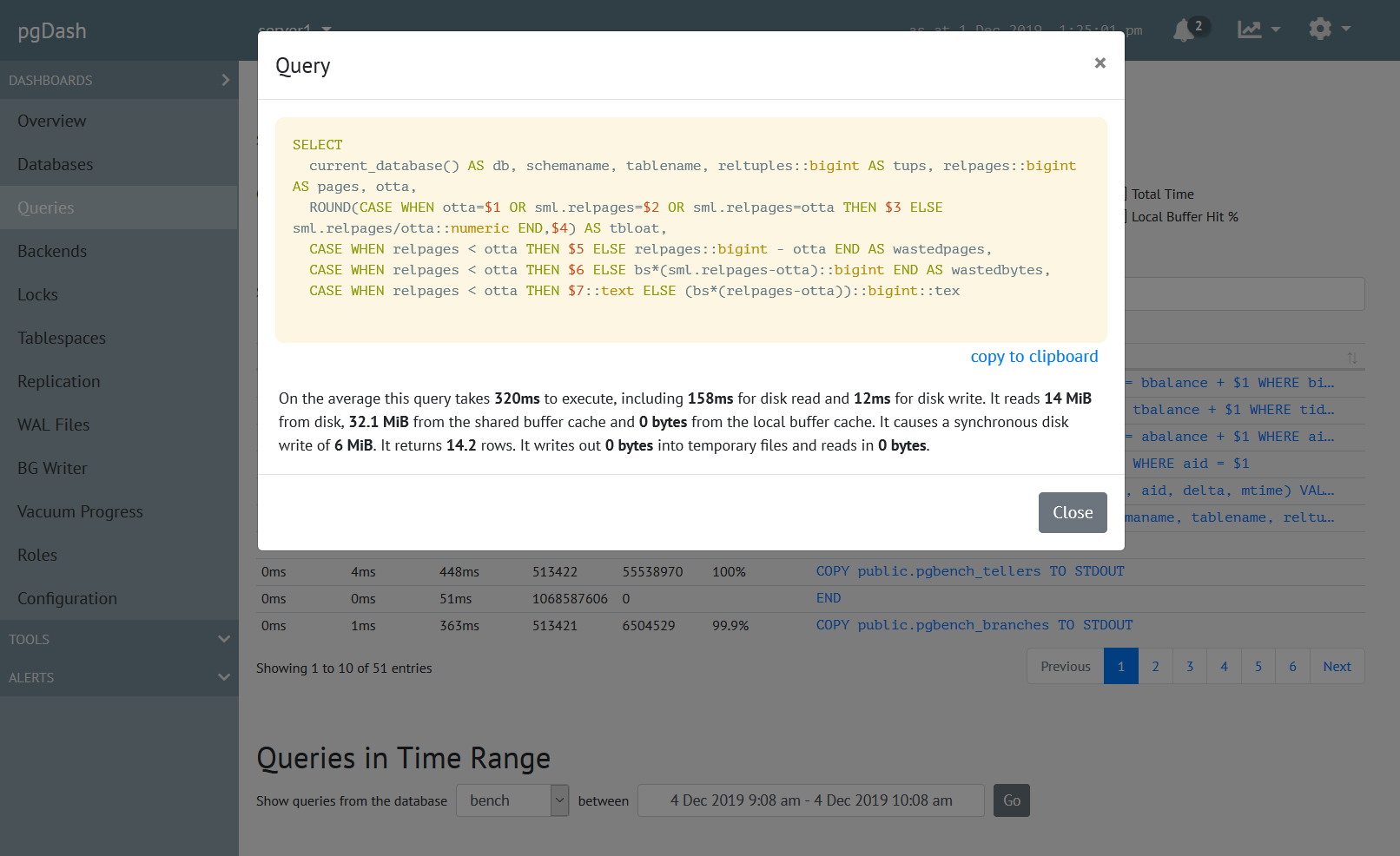

About pgDash

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.