In every deployment, there are always a few queries that run too slow.

Read on to see how to discover queries that take too long to execute, and how to figure out why they are slow.

Just Use pg_stat_statements?

pg_stat_statements is a popular extension that is included in the core PostgreSQL distribution and available by default on nearly all DBaaS providers. It is invaluable, and is more or less the only way to get statistics on queries without installing custom extensions.

It has however, a couple of limitations when it comes to discovering slow queries.

Cumulative Statistics

The pg_stat_statements extension provides cumulative statistics about every query ever executed by the server. For each query, it shows, among other metrics, the total number of times it has been executed, and the total time taken across all executions.

In order to “catch” slow queries when they happen, you need to periodically fetch the entire contents of the pg_stat_statements view, store it in a timeseries database, and compare the execution counts. For example, if have the contents of pg_stat_statements at 10.00 AM and 10.10 AM, you can select those queries which have a higher execution count at 10.10 AM than at 10.00 AM. For these queries, you can compute the average execution time during this interval, using:

(total time at 10.10 AM - total time at 10.00 AM) ÷ (total count at 10.10 AM - total count at 10.00 AM)

If this average execution time exceeds an upper threshold, you can trigger an alert to take action.

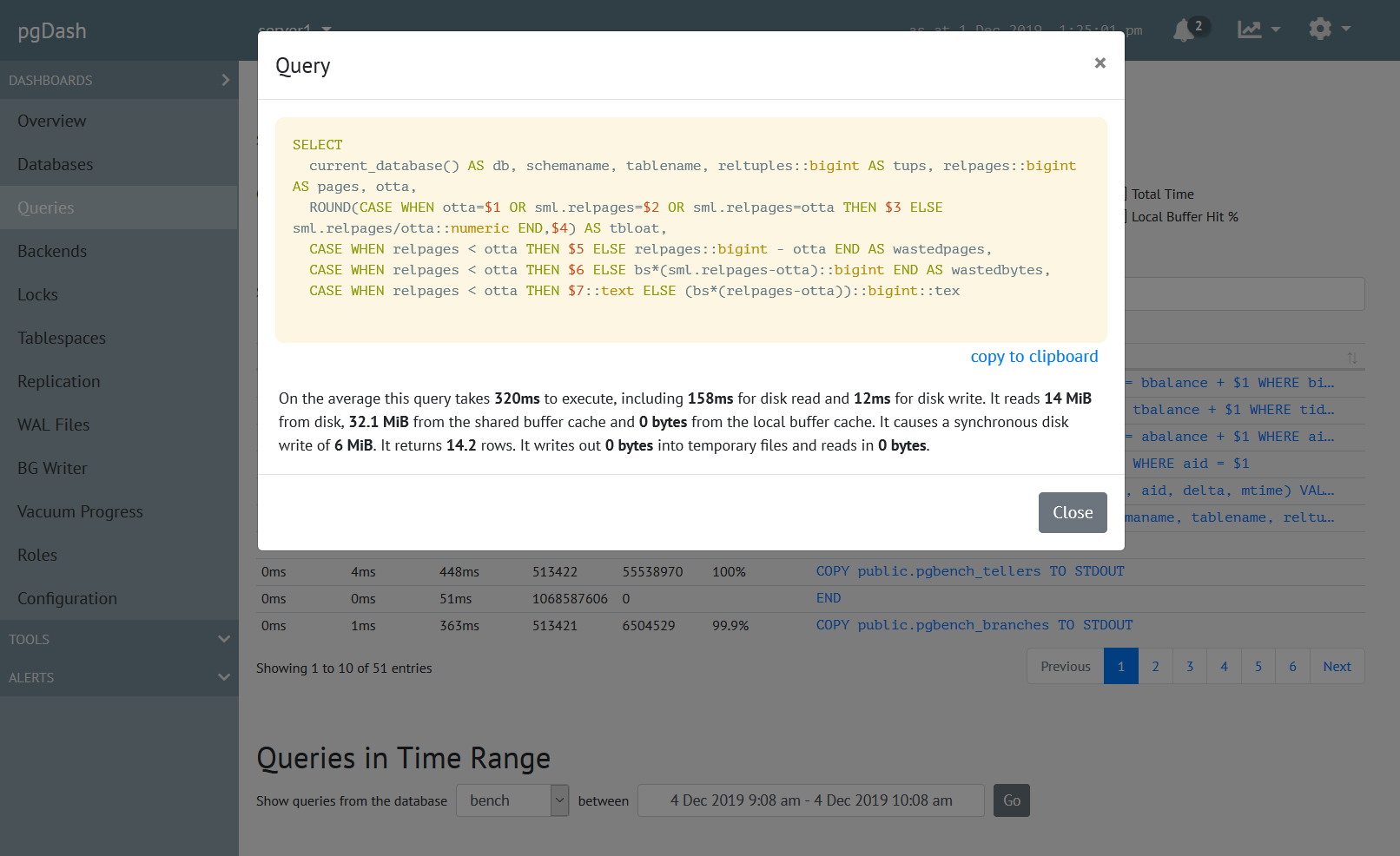

This does work reasonably well in practice, but you’ll need a good monitoring infrastructure, or a dedicated service like pgDash.

Query Parameters

pg_stat_statements does not capture the values of bind parameters passed to queries.

One of the things that the Postgres query planner estimates for selecting an execution plan is the number of rows a condition is likely to filter out. For example if most rows of a table have the value of an indexed column country as “US”, the planner might decide to do a sequential scan of the entire table for the where clause country = "US" , and might decide to use an index scan for country = "UK" since the first where clause is expected to match most rows in the table.

Knowing the actual value of the parameters for which the query execution was slow can help diagnose slow query issues faster.

Slow Query Logging

The simpler alternative is to log slow queries. Unlike a certain other DBMS that makes this easy, PostgreSQL presents us with a bunch of similar-looking configuration settings:

log_statementlog_min_duration_statementlog_min_duration_samplelog_statement_sample_ratelog_parameter_max_lengthlog_parameter_max_length_on_errorlog_duration

These are described in detail in the Postgres documentation. Here is a reasonable starting point:

# next line says only log queries that take longer 5 seconds

log_min_duration_statement = 5s

log_parameter_max_length = 1024

log_parameter_max_length_on_error = 1024

Which results in logs like these:

2022-04-14 06:17:11.462 UTC [369399] LOG: duration: 5.060 ms statement: select i.*, t."Name" as track, ar."Name" as artist

from "InvoiceLine" as i

join "Track" as t on i."TrackId" = t."TrackId"

join "Album" as al on al."AlbumId" = t."AlbumId"

join "Artist" as ar on ar."ArtistId" = al."ArtistId";

If there are too many logs, you can ask Postgres to log only (say) 50% of queries that run longer than 5 seconds:

log_min_duration_sample = 5s

log_statement_sample_rate = 0.5 # 0..1 => 0%..100%

You should of course, read through the docs about what these parameters mean and imply before adding them into your Postgres configuration. Be warned that the settings are quirky and non-intutive.

Execution Plans of Slow Queries

It is generally not sufficient to know that a slow query happened, you’ll also need to figure out why it was slow. For this, you’ll typically first check the execution plan of the query.

auto_explain is another core PostgreSQL extension (again, available on most DBaaS too) that can log the execution plans of queries that have just finished execution. It is documented here.

To enable auto_explain, you’d typically add it to shared_preload_libraries and restart Postgres. Here is a sample starter configuration:

# logs execution plans of queries that take 10s or more to run

auto_explain.log_min_duration = 10s

auto_explain.log_verbose = on

auto_explain.log_settings = on

auto_explain.log_format = json

auto_explain.log_nested_statements = on

# enabling these provide more information, but have a performance cost

#auto_explain.log_analyze = on

#auto_explain.log_buffers = on

#auto_explain.log_wal = on

#auto_explain.log_timing = on

#auto_explain.log_triggers = on

This will cause plans to be logged as JSON format, which can then be visualized in tools like these.

Still-Executing Queries

All the techniques listed above have one thing in common: they produce actionable output only after a query has finished execution. They cannot be used to handle queries that are so slow this one time, that they haven’t finished execution yet.

Each connection to a PostgreSQL server is handled by a backend, specifically a client backend. When such a backend is excecuting a query, it’s state is active. It might also have started a transaction but is then idle, called idle in transaction state.

The pg_stat_activity system view documented here provies a list of all running Postgres backends. You can query this view to get queries that are still running:

SELECT client_addr, query_start, query

FROM pg_stat_activity

WHERE state IN ('active', 'idle in transaction')

AND backend_type = 'client backend';

By the way, without using third-party extensions, there is no way to know the execution plan of a query that is currently being executed by a backend.

Locks

If the execution plan of a slow query does not indicate any obvious issues, the backend executing the query might have been delayed by contended locks.

Locks are obtained either explicitly or implicitly during query execution for a variety of reasons. There is a whole chapter in the Postgres documentation devoted to this.

Logging Locks

Typically, an upper limit on how long to wait is set using the option lock_timeout, usually at the client side. If a query has been waiting this long to acquire a lock, Postgres will cancel the execution of this query and log an error:

2021-01-30 09:35:52.415 UTC [67] psql postgres testdb 172.17.0.1 ERROR: canceling statement due to lock timeout

2021-01-30 09:35:52.415 UTC [67] psql postgres testdb 172.17.0.1 STATEMENT: cluster t;

Let’s say you want to set a lock timeout of 1 minute, but log queries that wait for locks for more than, say 30 seconds. You can do this using:

log_lock_waits = on

deadlock_timeout = 30s

This will create logs like this:

2021-01-30 09:49:22.331 UTC [70] psql postgres testdb 172.17.0.1 LOG: process 70 still waiting for ShareLock on transaction 493 after 30009.004 ms

2021-01-30 09:49:22.331 UTC [70] psql postgres testdb 172.17.0.1 DETAIL: Process holding the lock: 68. Wait queue: 70.

2021-01-30 09:49:22.331 UTC [70] psql postgres testdb 172.17.0.1 CONTEXT: while locking tuple (0,3) in relation "t"

2021-01-30 09:49:22.331 UTC [70] psql postgres testdb 172.17.0.1 STATEMENT: select * from t for update;

The use of deadlock_timeout is not a typo: it is the value that the lock wait logging mechanism uses. Ideally, there should have been something like log_min_duration_lock_wait, but unfortunately, that is not the case.

In case of actual deadlocks, Postgres will abort the deadlocked transactions after deadlock_timeout duration, and will log the offending statements. No explicit configuration is necessary.

2021-01-30 09:55:37.724 UTC [68] psql postgres testdb 172.17.0.1 LOG: process 68 detected deadlock while waiting for ShareLock on transaction 496 after 30007.633 ms

2021-01-30 09:55:37.724 UTC [68] psql postgres testdb 172.17.0.1 DETAIL: Process holding the lock: 70. Wait queue: .

2021-01-30 09:55:37.724 UTC [68] psql postgres testdb 172.17.0.1 CONTEXT: while locking tuple (0,3) in relation "t"

2021-01-30 09:55:37.724 UTC [68] psql postgres testdb 172.17.0.1 STATEMENT: select * from t where a=4 for update;

2021-01-30 09:55:37.725 UTC [68] psql postgres testdb 172.17.0.1 ERROR: deadlock detected

2021-01-30 09:55:37.725 UTC [68] psql postgres testdb 172.17.0.1 DETAIL: Process 68 waits for ShareLock on transaction 496; blocked by process 70.

Process 70 waits for ShareLock on transaction 495; blocked by process 68.

Process 68: select * from t where a=4 for update;

Process 70: select * from t where a=0 for update;

2021-01-30 09:55:37.725 UTC [68] psql postgres testdb 172.17.0.1 HINT: See server log for query details.

2021-01-30 09:55:37.725 UTC [68] psql postgres testdb 172.17.0.1 CONTEXT: while locking tuple (0,3) in relation "t"

2021-01-30 09:55:37.725 UTC [68] psql postgres testdb 172.17.0.1 STATEMENT: select * from t where a=4 for update;

Discovering Current Locks

The entire list of currently granted locks are available from the pg_locks system view. However, it is typically easier to use the function pg_blocking_pids, together with pg_stat_activity, like this:

SELECT state, pid, pg_blocking_pids(pid), query

FROM pg_stat_activity

WHERE backend_type='client backend';

which can show an output like this:

state | pid | pg_blocking_pids | query

---------------------+--------+------------------+-------------------------------------------------

active | 378170 | {} | SELECT state, pid, pg_blocking_pids(pid), query+

| | | FROM pg_stat_activity +

| | | WHERE backend_type='client backend';

active | 369399 | {378068} | cluster "Track";

idle in transaction | 378068 | {} | select * from "Track" for update;

(3 rows)

which indicates that there is one backend which is blocked (the one executing the CLUSTER statement), and that it is being blocked by PID 378068 (which has executed a SELECT..FOR UPDATE but is then idling within the transaction).

About pgDash

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.