Having regular backups of your PostgreSQL database alone is not sufficient for disaster recovery – you need to ensure that the backup files are accessible and healthy if and when required for a restoration procedure. Read on to see some examples of how to setup automated testing of PostgreSQL backups.

Backups Made Using pg_basebackup

The pg_basebackup backups contain the entire data directory for a database cluster. This directory is usually packed up into a tarball, sometimes with an additional tarball for WAL files that were created since the start of the backup.

To test such a pg_basebackup tarball, first unpack the tarball into an

empty directory. If there is a separate WAL file tarball, unpack that into the

pg_wal directory inside the new directory:

$ mkdir backup-test

$ cd backup-test

$ tar --no-same-owner xvf /path/to/base.tar.gz

$ mkdir -p pg_wal

$ cd pg_wal

$ tar --no-same-owner xvf /path/to/pg_wal.tar.gzYou can now start a PostgreSQL server process for this directory:

$ pg_ctl -D path/to/backup-test start(Note: pg_ctl is a command-line tool included in the standard Postgres distribution. It is available everywhere that Postgres itself is, similar to the other included tools like psql and pg_dump. Learn more about pg_ctl here.)

If there is already a PostgreSQL server installed/running on this machine, you’ll probably want to start on a port other than the default 5432:

$ pg_ctl -D path/to/backup-test -o "-p 6000 -k /tmp" startIf everything succeeded so far, you’ll want to check if the data inside your restored database is sane. If you have automated test scripts to run against your database, now would be a good time to launch at least a small set of those tests against this restored database. If not, you can hack together some quick checks using psql:

$ psql -p 6000 -d mydb -o /dev/null -c "select * from users limit 1"The above command does a simple query against a table which should exist. The exit code of psql should tell you if the query was successful or not. Of course, you can run more complex queries, or run a .sql file, or even a separate test script that will connect to this database and run tests.

When you’re done with the testing, you can stop the Postgres server process with:

$ pg_ctl -D path/to/backup-test stopAnd cleanup the whole extracted database cluster directory:

$ rm -rf path/to/backup-testHere is how it looks when it’s all put together:

#!/bin/bash

# exit immediately if any step fails

set -eo pipefail

# fetch the latest backup

# TODO: copy out base.tar.gz and pg_wal.tar.gz of latest backup

# create a directory to work in

BACKUP_DIR=/tmp/backup-test

rm -rf $BACKUP_DIR

mkdir $BACKUP_DIR

# unpack the backup archives

tar -C $BACKUP_DIR --no-same-owner xvf /path/to/base.tar.gz

mkdir -p $BACKUP_DIR/pg_wal

tar -C $BACKUP_DIR/pg_wal --no-same-owner xvf /path/to/pg_wal.tar.gz

# start a new Postgres server for the cluster on port 6000

pg_ctl -D $BACKUP_DIR -o "-p 6000 -k /tmp" start

# perform a simple test

psql -p 6000 -d mydb -o /dev/null -c "select * from users limit 1"

# shutdown the server

pg_ctl -D $BACKUP_DIR stop

# cleanup the files

rm -rf $BACKUP_DIR /path/to/base.tar.gz /path/to/pg_wal.tar.gzBackups Made Using pg_dump

The pg_dump tool (docs) can also be used to create backups – this is more flexible in that you can optionally select the database/schema/tables you want to backup, as opposed to pg_basebackup which is an all-or-nothing process.

With pg_dump, you can generate a single .sql script or a binary .pgdmp

file that contains all the data (and optionally also the DDL statements for creating

the tables/indexes etc.). To restore such a file, you need to connect to a live

database server and run the SQL commands inside the .sql/.pgdmp file. While you can

use the regular psql to run the .sql file, you’ll need to use the pg_restore

command (docs) to

run the .pgdmp file.

To test such backups, first we fetch the file and then create a new, empty database cluster:

$ rm -rf path/to/backup-test

$ pg_ctl -D path/to/backup-test initdband start a PostgreSQL server on it, listening on port 6000 as before:

$ pg_ctl -D path/to/backup-test -o "-p 6000 -k /tmp" startIt is possible to generate pg_dump files that are fully self-contained, but it is also possible to generate them to be not so. Therefore, depending on how the dump was generated, some setup steps might be required:

- create a database

- create tables, indexes etc.

- grant privileges

Once that is done, you can either use psql or pg_restore to bring the data back to life:

# for .sql files

$ psql -p 6000 -h /tmp -v ON_ERROR_STOP=1 -1 -b -f path/to/dump.sql

# for .pgdmp files

$ pg_restore -p 6000 -h /tmp -d mydb -C -1 -f path/to/dump.pgdmpAs before, at this point, tests can be carried out to ensure sanity of the restored data.

Here is how it looks, all put together:

#!/bin/bash

# exit immediately if any step fails

set -eo pipefail

# fetch the latest dump

# TODO: copy out the dump.sql or dump.pgdmp of latest backup

# create an empty database cluster

BACKUP_DIR=/tmp/backup-test

rm -rf $BACKUP_DIR

pg_ctl -D $BACKUP_DIR initdb

# start a new Postgres server for the cluster on port 6000

pg_ctl -D $BACKUP_DIR -o "-p 6000 -k /tmp" start

# TODO: perform any specific setup steps here

# restore the file, .sql:

psql -p 6000 -h /tmp -v ON_ERROR_STOP=1 -1 -b -f path/to/dump.sql

# or .pgdmp:

pg_restore -p 6000 -h /tmp -d mydb -C -1 -f path/to/dump.pgdmp

# perform a simple test

psql -p 6000 -d mydb -o /dev/null -c "select * from users limit 1"

# shutdown the server

pg_ctl -D $BACKUP_DIR stop

# cleanup the files

rm -rf $BACKUP_DIR /path/to/dump.*Watch Out For Triggers

While restoring a pg_dump backup, data gets inserted into tables, much like when an application does it. If you have triggers that connect to external services to notify of row insertions, it’d be best to disable them during the restore procedure.

When invoking pg_dump to emit sql files, you can use the option

--disable-triggers to tell pg_dump to generate script to disable the

triggers while inserting.

When invoking pg_restore on a database that already has triggers, you

can use the --disable-triggers in pg_restore to achieve the same effect.

PITR Testing

Point-in-time-recovery (PITR) in Postgres relies on a full backup taken using pg_basebackup, and a sequence of WAL files from that point up until the point in time when you want to recover to. Testing of PITR therefore involves testing the full backup as well as the subsequent WAL files.

For automated backup testing, we don’t have a specific recovery target. All

archived WAL files from the last backup onwards until the most recent one

should be tested. The easiest way to test this is to follow the same steps as

for the pg_basebackup test method, with just one additonal step. After

unpacking the latest backup, fetch all relevant and available WAL files and

place them into pg_wal before starting the Postgres server. Specifically:

#!/bin/bash

# exit immediately if any step fails

set -eo pipefail

# fetch the latest backup

# TODO: copy out base.tar.gz and pg_wal.tar.gz of latest backup

# create a directory to work in

BACKUP_DIR=/tmp/backup-test

rm -rf $BACKUP_DIR

mkdir $BACKUP_DIR

# unpack the backup archives

tar -C $BACKUP_DIR --no-same-owner xvf /path/to/base.tar.gz

mkdir -p $BACKUP_DIR/pg_wal

tar -C $BACKUP_DIR/pg_wal --no-same-owner xvf /path/to/pg_wal.tar.gz

# --> this is the new extra step <--

# TODO: fetch all WAL files from the WAL archive since the last

# backup, and place them in $BACKUP_DIR/pg_wal

# start a new Postgres server for the cluster on port 6000

pg_ctl -D $BACKUP_DIR -o "-p 6000 -k /tmp" start

# perform a simple test

psql -p 6000 -d mydb -o /dev/null -c "select * from users limit 1"

# shutdown the server

pg_ctl -D $BACKUP_DIR stop

# cleanup the files

rm -rf $BACKUP_DIR /path/to/base.tar.gz /path/to/pg_wal.tar.gzThis should verify whether both the last backup and subsequent WAL files are good, so that they can be used for PITR if and when needed.

About pgDash

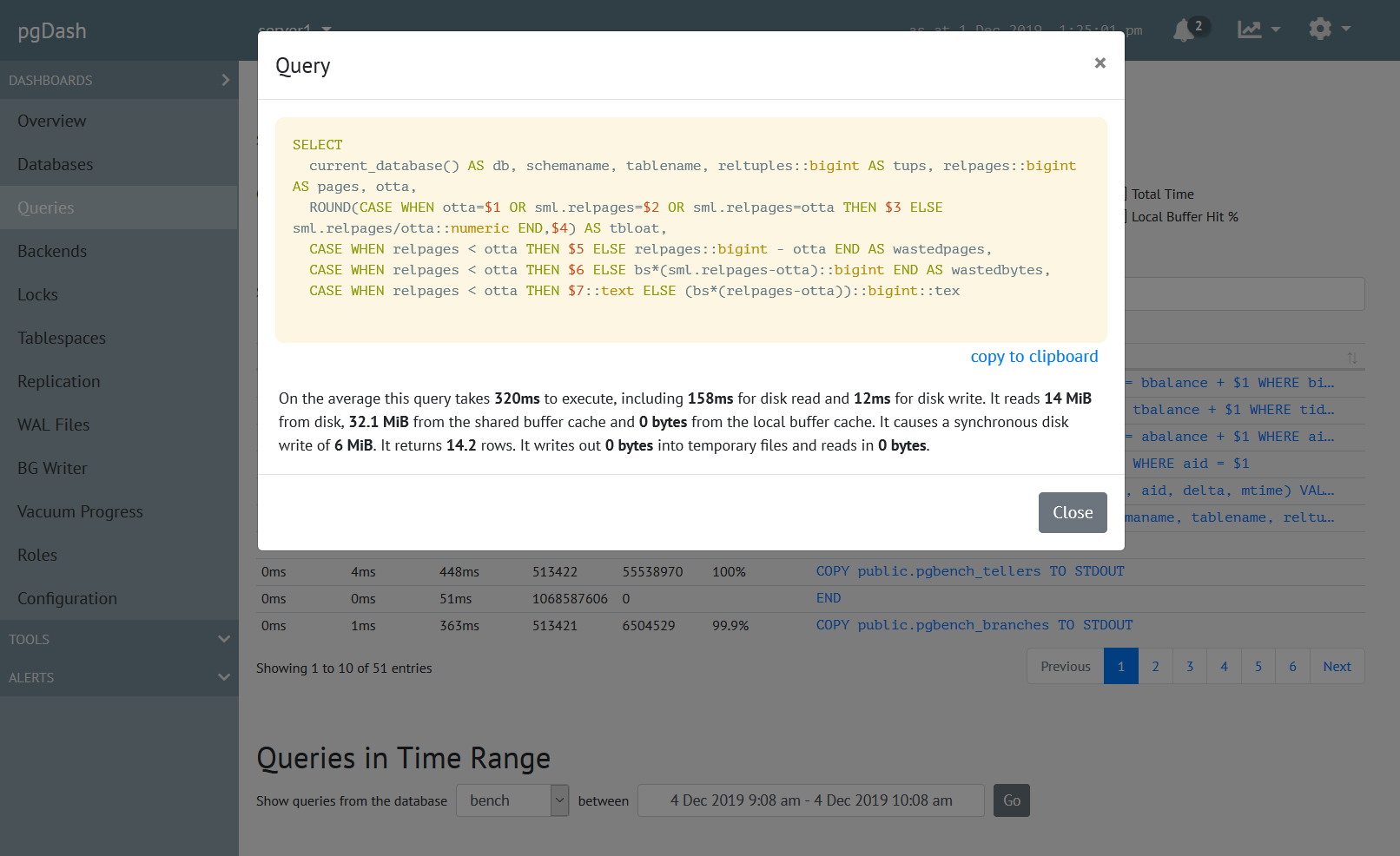

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.