What metrics of your PostgreSQL deployment should you be monitoring? This series of blog posts aims to provide a minimal, starting set of essential monitoring actions that you should implement to ensure the health and stability of your Postgres servers.

This is the second part of a blog series, and covers database-level parameters. The first one covered cluster-level parameters.

Part 2: Database Level

In this part, we look at metrics and information that should be monitored for each important database that you use.

Most of the SQL queries listed below should be run while connected to the database in question.

1. Connected Clients

Connected clients take up OS and system resources. Postgres has a process-per-client architecture, and too many clients can slow down query response times for everyone. Consider using PgBouncer or pgpool to reduce the number of connections that the Postgres server will have to service.

Keep an eye on changes in baseline after application updates and surges in connections due to increased traffic.

Action: Monitor the maximum number of connected clients over each day/week, investigate changes in trend.

How To:

-- returns the number of clients connected for each database

SELECT datname, count(*)

FROM pg_stat_activity

GROUP BY datname;2. Size

The actual disk size used by the database has to be monitored. In most cases, the database size keeps on growing, so it is the growth rate that is more interesting. Again, be wary of increased growth rate after new application releases.

Action: Monitor the growth of the size of the database over each week/month, investigate trends, re-provision.

How To:

-- returns the size for each database

SELECT datname, pg_size_pretty(pg_database_size(datname))

FROM pg_database;3. Table Bloat Across All Tables

Because of Postgres’ MVCC architecture, older versions of rows lie around in the physical data files for every table, and is termed bloat. The operation to clear out obsolete row versions is called vacuum. Postgres runs a background process called autovacuum, which picks up candidate tables (based on configurable parameters) and vacuums them for you.

Bloat slows down table operations and wastes disk space, and can run away even with autovacuum. Monitoring of bloat, as an absolute number of bytes as well as a percentage (of dead data to total data), is required. This can be done at the database level as a total across all tables, with the possibility to drill down into the “most bloated tables”.

Action: Continuously monitor total bloat as bytes and percentage, alert if values exceed a set threshold.

How To:

Use check_postgres or pgmetrics to get bloat estimates. See the wiki for more info.

4. Index Bloat Across All Indexes

Bloated indexes can slow down inserts and reduce lookup performance. Monitor the bloat of indexes as both an absolute value (number of bytes) and as a percentage. Indexes will have to be rebuilt when they become too bloated.

Action: Continuously monitor total bloat as bytes and percentage, alert if values exceed a set threshold.

How To:

Use check_postgres or pgmetrics to get bloat estimates. See the wiki for more info.

5. Long Running Transactions

Transactions open for too long are not good news for PostgreSQL. Long-running transactions can cause retention of WAL files, can hang on to locks and prevent vacuum. Applications should be designed to keep transaction durations as low as possible.

A backend running a long-running transaction can be killed with pg_cancel_backend() and pg_terminate_backend() functions.

Action: Continuously monitor the count of transactions that have been running for more than a set time duration, alert if values exceed a set threshold.

How To:

-- returns the count of transactions that have been running for more than a day

SELECT count(*)

FROM pg_stat_activity

WHERE xact_start < now() - '24 hours'::interval;6. Deadlocks

In PostgreSQL, backends place locks on rows and tables implicitly as it goes about executing queries that modify rows. Queries can also place explicit locks (like SELECT .. FOR UPDATE). Just like in multi-threaded programming, two transactions placing locks, either implicitly or explicitly, in opposing order can result in a deadlock.

PostgreSQL will detect deadlocks and will rollback one of the transactions involved (All locks held by a transaction are released when it is committed or rolled back). Details will be logged in the PostgreSQL logging destination.

Applications should be designed to avoid the possibility of deadlock. They can also choose to retry a transaction in case of deadlock.

Action: Monitor deadlock counts over each day/week, redesign application to reduce count, investigate changes.

How To:

Occurrences of deadlocks, along with additional information, are logged in the PostgreSQL log file. Use pgmetrics, pgbadger or other PostgreSQL-specific log processing tools to extract this information.

7. Oldest Vacuum

Regular vacuuming of tables, either with autovacuum, or with scheduled jobs, or manually is a must for keeping table operations fast. Vacuums can fail for various reasons though, including long running transactions, inactive replication slots etc.

Since regular vacuuming of tables is quite important in the Postgres world, it is best to regularly check if all tables have been vacuumed at least once in a set interval.

And although they tend to be out of sight and out of mind, the system catalog tables should also be vacuumed.

Action: Continuously check if any table has not been vacuumed in the last set number of hours/days, alert if any.

How To:

-- returns the top 5 tables vacuumed least recently

SELECT schemaname || '.' || relname, now()-last_vacuum

FROM pg_stat_all_tables

ORDER BY last_vacuum ASC NULLS LAST LIMIT 5;

-- returns all tables that have not been vacuumed in the last 7 days

SELECT schemaname || '.' || relname, now()-last_vacuum

FROM pg_stat_all_tables

WHERE last_vacuum < now() - '7 days'::interval;8. Oldest Analyze

In order to execute your SELECT queries, the query planner prepares an execution plan that describes which indexes and tables have to be read, and how. To come up with an efficient execution plan, the planner needs to have statistics about the distribution of values, sizes of tuples and so on. Such statistical information about the data in a table are collected and maintained by analyze operations. Tables with up-to-date statistics result in faster queries and lesser I/O.

The autovacuum process mentioned above typically also runs ANALYZE on the table it vacuums to keep this information updated. However, this alone might not provide sufficient analyze coverage for your tables. You’ll want to supplement by running ANALYZE as scheduled jobs or after significant table mutations.

In the interest of query performance, it is best to continuously check whether all tables have been analyzed at least once in a set interval.

Action: Continuously check if any table has not been analyzed in the last set number of hours/days, alert if any.

How To:

-- returns the top 5 tables analyzed least recently

SELECT schemaname || '.' || relname, now()-last_analyze

FROM pg_stat_all_tables

ORDER BY last_analyze ASC NULLS LAST LIMIT 5;

-- returns all tables that have not been analyzed in the last 7 days

SELECT schemaname || '.' || relname, now()-last_analyze

FROM pg_stat_all_tables

WHERE last_analyze < now() - '7 days'::interval;Collecting These Metrics

The sections above provide SQL statements to extract the needed metrics from a running Postgres server. If you’d rather not write the scripts yourself, check out the open source tool pgmetrics. It can collect the metrics above, and more, and report them in text and JSON formats.

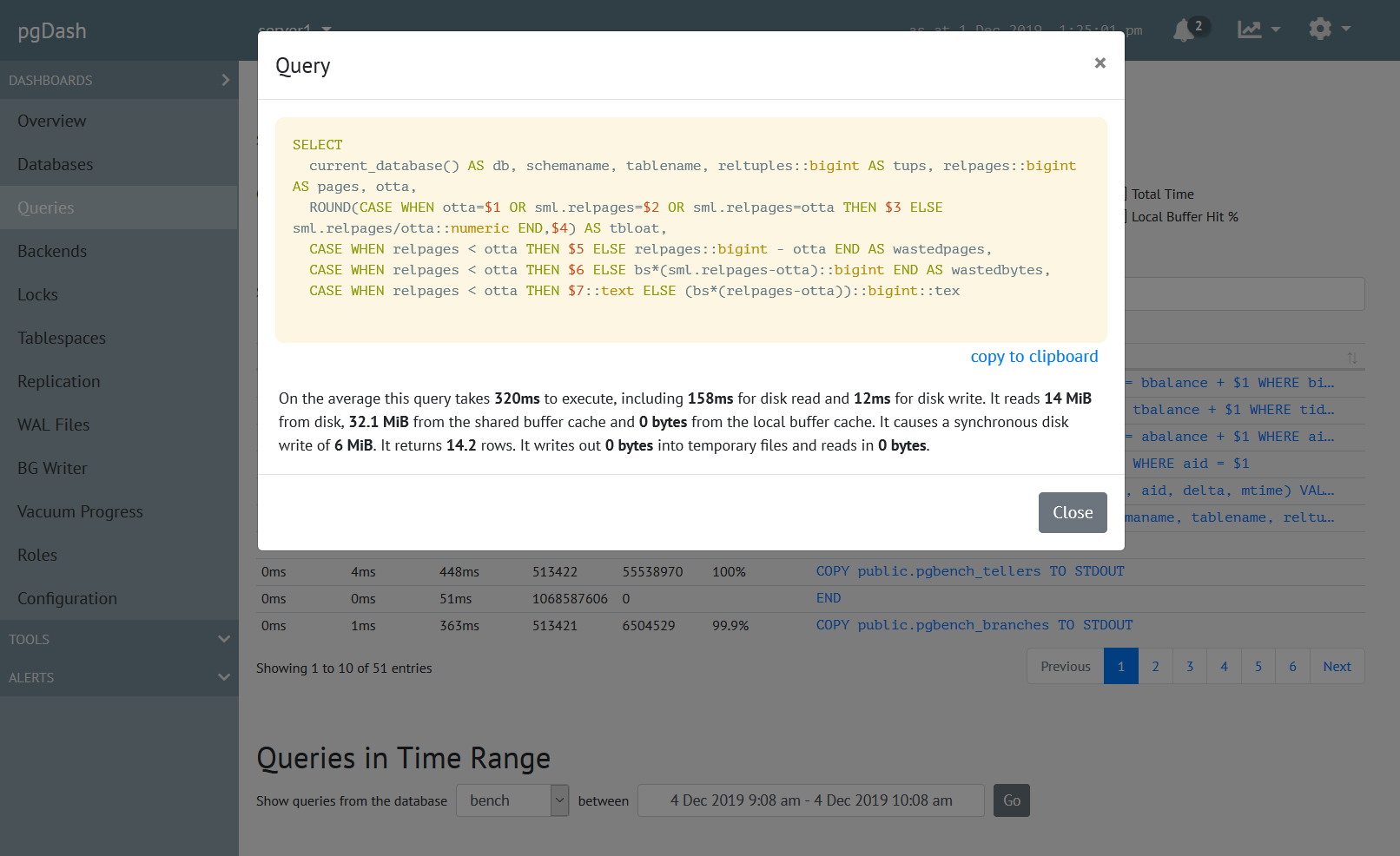

You can directly send the pgmetrics reports to our commercial offering, pgDash, which stores and processes these reports to display graphs and perform alerting.

Next Up

The next part in this series will cover table-level, index-level and system-level metrics. Stay tuned!

About pgDash

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.