Horizontal scaling is the art and science of distributing data across multiple servers without compromising data integrity, transaction safety and query performance. Read on for a roundup of the features and capabilities of PostgreSQL that you can use to scale out your database deployment.

Read Replicas

A read replica typically refers to a “standby” server that redundantly duplicates all the data on a primary server, stays continuously up-to-date with the primary server, and allows clients to query (but not update) the data it contains. In Postgres world, this type of server is called a hot standby.

A PostgreSQL server normally starts up, accepts changes from clients via SQL commands, and generates a change feed in the form of a series of WAL (write ahead log) records. (These records then get checkpointed into actual row/column data.) A PostgreSQL standby server starts up and stays in what is known as recovery mode. In recovery mode, the Postgres server does not accept changes via SQL commands, but accepts only WAL records generated by another server as input. These WAL records are then replayed, and consequently the same changes that are happening on the primary server are recreated on the standby server.

The process of collecting newly generated WAL files, copying them over to a standby server, and placing them in the appropriate location on the standby’s data directory, is called log shipping in Postgres jargon. The most popular technique of log shipping is streaming replication.

A simple standby server does not allow regular client connections (a “simple” standby is called a warm standby in Postgres world):

$ psql -h /tmp -p 6001 postgres

psql: error: connection to server on socket "/tmp/.s.PGSQL.6001" failed: FATAL: the database system is not accepting connections

DETAIL: Hot standby mode is disabled.

But it stands to reason that read-only queries that do not update the data in any way may be allowed, and that is indeed what a hot standby allows. To make a regular standby into a hot standby, change this configuration and restart the Postgres server:

hot_standby = on

Clients can now connect to the hot standby server:

$ psql -h /tmp -p 6001 postgres

psql (15.1 (Debian 15.1-1.pgdg110+1))

Type "help" for help.

postgres=# select pg_is_in_recovery();

pg_is_in_recovery

-------------------

t

(1 row)

There are however, some caveats about Postgres’ hot standby feature:

- Standby servers replicate the entire data on the primary server – called physical replication in Postgres jargon. The “entire data” consists of all the databases living on the primary server. Physical replication is an all-or-nothing deal, there is no way to replicate only a subset of databases using this approach.

- Temporary tables cannot be created – creation of tables, even temporary ones, require updation of system catalogs

- Queries that require row locks and some other types of locks are not allowed

- Prepared transactions are not allowed

- Certain changes on the primary (like a vacuum) can lead to cancellation of query running on a hot standby (which needed the rows that just got vacuumed in the primary)

- More in the PostgreSQL docs

That said, physical replication feature of Postgres has been around for a long time now, is heavily used in production everywhere, is rock solid and well-documented. It is available in the standard PostgreSQL installation, no other extensions or tools required. It’s gotchas and their workarounds are only a web search away. A hot standby can serve not only as a read replica, but also as a failover candidate in a HA setup.

Multiple Read Replicas

Multiple read replicas are often used to get the data nearer to their place of use inorder to reduce application query latency. With PostgreSQL, you can have multiple standby servers for a single primary server, or cascade standbys from another standby.

There are no additional settings required for connecting multiple standbys to a primary, or for cascading another standby from an existing standby. Do check however, that the connection limits, wal sender process limits and the system resources are high enough to support multiple standbys.

Read more here.

Delayed Replication

Having a replica that stays a few hours or even days behind the current primary database state can be an effective way to mitigate the results of disastrous queries (“oops I forgot the where clause to the delete when running on prod”). Lost or overwritten data can be manually queried out from a delayed replica, and suitable corrective actions can be taken.

Without a delayed replica, fixing such an issue would require restoring the latest backup, PITR-ing up to the time just before the command execution, then fetching data from that point. On the downside though, having a fully operational server just to recover from such mistakes maybe too expensive.

If you do need it however, it’s fairly simple to configure your standby to be a delayed replica:

# add this to your standby to make it lag behind the primary by 4 hours

recovery_min_apply_delay = '4h'

More documentation here.

Logical Replication

Physical replication aims to replicate the “physical”, on-disk layout of the primary server’s data files by relaying WAL records that essentially encode the deltas of the file contents. Another approach to replication would be to record and replay the SQL-level transactions. For example, if we are interested in replicating a single isolated table to another server, it’d be sufficient to record and replay all the INSERT/UPDATE/DELETE commands, in a serializable order, that are run on it. Indeed, it would be faster to transmit “UPDATE t SET a=a+1” than to copy over the on-disk changes to a million rows.

This type of replication is called logical replication, and was introduced in core PostgreSQL in v10. It has proven quite helpful for a variety of use cases, most importantly perhaps for upgrades of major Postgres versions without downtime. The improvements since it’s introduction in v10 (especially in v14 and v15 ) have made it more powerful and suitable for many more use cases. Read more about logical replication in the official PostgreSQL docs.

Logical replication in Postgres works like this: A publication object, with a unique name, is setup at the source server. This publication refers to a list of existing tables, and acts as a “publisher” of changes that happen to these tables. On another server, a subscription object is setup that will read the change feed from a specific publication object on a specific server, and replay the changes to local tables of the same name and column specifications.

Here is how it looks like in practice (note that wal_level must be set to logical on both servers first):

On the source server:

postgres=# create table tbl1 (a int primary key);

CREATE TABLE

postgres=# insert into tbl1 (a) select generate_series(1, 100);

INSERT 0 100

postgres=# create publication pub1 for table tbl1;

CREATE PUBLICATION

On the destination server:

postgres=# create table tbl1 (a int primary key);

CREATE TABLE

postgres=# create subscription sub1 connection 'host=/tmp port=6000 dbname=postgres' publication pub1;

NOTICE: created replication slot "sub1" on publisher

CREATE SUBSCRIPTION

postgres=# select count(*) from tbl1;

count

-------

100

(1 row)

Logical replication also does have some limitations, however, like the lack of DDL replication. You can read more about some of these limitations here. Incorrectly setup logical replication can also be problematic, since Postgres can be made to replicate out data that violates the transaction atomicity and referential integrity in the source server.

Replicating Only Some Tables

A publication can be setup to publish changes to all tables (the list of tables present at the time of creation of the publication, tables created afterwards are not automatically included), or a specified list of tables:

-- include all tables present at time of creation of publication

create publication pub2 for all tables;

-- include only specified list of tables

create publication pub3

for table tbl3, tbl4, tbl5;

PostgreSQL v15 added the ability to specify all tables in one or more schemas too:

-- Postgres v15+ only

create publication pub4

for tables in schema schema1, schema2;

The list of tables in a publication can be changed at a later time using ALTER PUBLICATION.

Read more about publications here and here.

Replicating Only Some Rows of a Table

Postgres v15 also added the powerful capability of publishing only the selected rows of a table. You can now specify a row filter for a publication, and only the rows that satisfy this expression will be considered for replication. Read more about row filters here.

Row filter expressions cannot be too complicated (for example, it cannot include calls to stored functions), and are more like table-level CHECK constraints than query-level WHERE clauses in that regard. Here is an example that publishes only rows where a certain column’s value is even:

postgres=# create table tbl2 (a int primary key);

CREATE TABLE

postgres=# insert into tbl2 (a) select generate_series(1, 100);

INSERT 0 100

postgres=# create publication pub2 for table tbl2 where (a % 2 = 0);

CREATE PUBLICATION

On the subscription side, you can see that only half the rows are present:

postgres=# create table tbl2 (a int primary key);

CREATE TABLE

postgres=# create subscription sub2 connection 'host=/tmp port=6000 dbname=postgres' publication pub2;

NOTICE: created replication slot "sub2" on publisher

CREATE SUBSCRIPTION

postgres=# select count(*) from tbl2;

count

-------

50

(1 row)

Replicating Only Some Columns of a Table

Starting with Postgres v15 again, it is possible to replicate only a subset of the columns of a table. Along with the table name, the list of columns also can be specified like this:

postgres=# create table tbl3 (a int primary key, b text, c text);

CREATE TABLE

postgres=# create publication pub3 for table tbl3 (a, c);

CREATE PUBLICATION

postgres=# insert into tbl3 (a, b, c) values (1, 'hello', 'world');

INSERT 0 1

On the subscription side, the table which receives the replicated data has only the subset of columns:

postgres=# create table tbl3 (a int primary key, c text);

CREATE TABLE

postgres=# create subscription sub3 connection 'host=/tmp port=6000 dbname=postgres' publication pub3;

NOTICE: created replication slot "sub3" on publisher

CREATE SUBSCRIPTION

postgres=# select * from tbl3;

a | c

---+-------

1 | world

(1 row)

The row filter and column list can be specified together for a publication, so as to replicate only a subset of rows and columns of a table. All that with just a couple of simple commands, and no external tooling!

Replicating Only Some Operations on a Table

Rows of a table can get inserted, updated, deleted and the table itself can be truncated. The publisher of a table can be configured to include only one or more of these 4 operations into the replication data.

For example, here is how to setup a publisher that replicates only inserts and updates to a table:

postgres=# create table tbl4 (a int primary key, b text);

CREATE TABLE

postgres=# create publication pub4 for table tbl4 with (publish = 'insert, update');

CREATE PUBLICATION

postgres=# select * from tbl4;

a | b

---+---

(0 rows)

Now let’s setup and start the subscriber:

postgres=# create table tbl4 (a int primary key, b text);

CREATE TABLE

postgres=# create subscription sub4 connection 'host=/tmp port=6000 dbname=postgres' publication pub4;

NOTICE: created replication slot "sub4" on publisher

CREATE SUBSCRIPTION

Then let’s insert, update and delete some rows on the publisher:

postgres=# select * from tbl4;

a | b

---+---

(0 rows)

postgres=# insert into tbl4 (a, b) values (1, 'jello');

INSERT 0 1

postgres=# insert into tbl4 (a, b) values (2, 'world');

INSERT 0 1

postgres=# update tbl4 set b='hello' where a=1;

UPDATE 1

postgres=# delete from tbl4 where a=2;

DELETE 1

postgres=# select * from tbl4;

a | b

---+-------

1 | hello

(1 row)

And see what happens on the subscriber:

postgres=# select * from tbl4;

a | b

---+-------

2 | world

1 | hello

(2 rows)

The subscriber’s table contains the row that was deleted from the publisher, since the delete operations were not included in the changes sent across.

This also illustrates why improper use of logical replication can break transaction atomicity: if a row was created and then deleted within a single transaction on the publisher side, the subscriber will still end up with a row that was never seen by any other transaction on the publisher side.

Note that initially when the subscription is started, all rows from the source are copied over to the destination. Further replication is subject to the restrictions specified.

Muti Master and Bi-directional Replication

The terms “multi-master”, “multi-writer” and “bi-directional replication” refer to a deployment with two or more linked servers, any of which can consistently update a shared set of databases. A client application would be able to perform updates from any server, and the changes would be replicated to other servers, while maintaining consistency and integrity of the entire data.

As such, there is no “multi-master” mode of replication in core PostgreSQL. There are third-party extensions and tools that implement generic multi-master replication, but they are not as widely available or popular as PostgreSQL itself.

Logical replication can go a long way to implementing, in a schema-specific way, the goals you want to realize using a generic multi-master solution. Publications and subscriptions can be setup on any stock PostgreSQL server, and replication in any direction can be achieved easily. Conflict resolution must be handled at schema design and application level, as opposed to any features provided by a generic multi-master solution.

Synchronous Replication

By default, when a transaction is committed in PostgreSQL, it writes out the required WAL records and waits for the flush of this data to the disk. When the server is a primary replicating to one or more standby servers, this behavior can be further tightened to reduce the risk of data loss even more. This can be achieved by configuring the settings synchronous_commit and synchronous_standby_names.

The value of remote_write for synchronous_commit makes Postgres wait for the standby to write the WAL records to its disk before replying to the application that the commit has completed. The application_name of the standby that has to receive the WAL records is specified with synchronous_standby_names, like this:

synchronous_commit = remote_write

synchronous_standby_names = mystandby1

A remote_write does not necessarily mean that a query started on the standby right after the commit completes can see the changes contained in that commit. If such a guarantee is needed, use a value of remote_apply for synchronous_commit:

synchronous_commit = remote_apply

synchronous_standby_names = mystandby1

It is even possible to setup a quorum commit, that is to say, at least k of n nodes must have received the WAL records for the primary’s commit to succeed:

synchronous_commit = remote_write

synchronous_standby_names = ANY 2 (mystandby1, mystandby2, mystandby3)

What is probably most interesting about synchronous_commit is the ability to specify it at the transaction level rather than as a global setting. Enabling it for only certain transactions, like for example one which updates account balances, brings extra safety for operations when required.

BEGIN;

SET synchronous_commit = 'remote_write';

-- use the global setting for synchronous_standby_names

-- update data here

COMMIT;

Accessing Tables Across Servers

Not all data distributed across servers may need to be replicated or updated in real time. Data might be mostly static, for reference, lookup, or historical and so on. Such data can be accessed from main OLTP/OLAP servers using foreign data wrappers (FDW).

FDWs allow you to work with “foreign data”, which may reside anywhere external to the Postgres server. The ability to work with data from another Postgres server is implemented by postgres_fdw, an extension available in core PostgreSQL.

Postgres FDW

Here is how Postgres FDW looks in practice. Assume there is a source database with a table in it, like this:

srcdb=# create table srct (a int primary key);

CREATE TABLE

srcdb=# insert into srct (a) select generate_series(1, 100);

INSERT 0 100

At a destination server, you can setup a foreign table srct, which acts a proxy table for the actual srct table that lives in our source database:

destdb=# create extension postgres_fdw;

CREATE EXTENSION

destdb=# create server src foreign data wrapper postgres_fdw options (host '/tmp', port '6000', dbname 'srcdb');

CREATE SERVER

destdb=# create user mapping for current_user server src;

CREATE USER MAPPING

destdb=# import foreign schema public limit to (srct) from server src into public;

IMPORT FOREIGN SCHEMA

destdb=# select count(*) from srct;

count

-------

100

(1 row)

The foreign table takes up no space and contains no data – it simply serves as a placeholder to refer to the actual table living elsewhere. The destination Postgres server’s postgres_fdw extension will establish and maintain a connection to the source Postgres server, translating every query involving the foreign table into appropriate network calls.

The foreign table can work seamless with regular local tables, like in this join:

destdb=# create table destt (b int primary key, c text);

CREATE TABLE

destdb=# insert into destt (b,c) values (10,'foo'), (20,'bar');

INSERT 0 2

destdb=# select a,b,c from srct join destt on srct.a = destt.b;

a | b | c

----+----+-----

10 | 10 | foo

20 | 20 | bar

(2 rows)

The main work of the FDW is to push down the work to the remote server as much as possible and to minimize the amount of data sent back and forth between the two servers. For example, you would want the remote server to process a LIMIT clause, rather than fetching all the rows over then applying a LIMIT clause locally. However, given the complexity of SQL as well as PostgreSQL’s query planner and executor, this is no easy task. The efficiency keeps improving each version, but some queries might take a little too much time or work_mem that you would expect.

Materialized Views + Foreign Data Wrappers

Depending upon your use case, combining materialized views with FDW can offer a reasonable balance between replicating a full table and having it totally remote (foreign). The materialized view can effectively function as a local cache, which in turn can be used along with local-only tables for local-grade performance.

destdb=# create materialized view destmv as select a,b,c from srct join destt on srct.a = destt.b;

SELECT 2

destdb=# select * from destmv;

a | b | c

----+----+-----

10 | 10 | foo

20 | 20 | bar

(2 rows)

The “cache” can be refreshed anytime, peridocally or otherwise, with a regular “REFRESH MATERIALIZED VIEW” command. As a bonus, (local) indexes can be defined on the view to further speed up queries.

Distribute Rows of a Table Across Servers

Sharding of rows of a single table across multiple servers while presenting the unified interface of a regular table to SQL clients is perhaps the most sought-after solution to handling big tables. This approach keeps the applications simpler, and keeps DBAs working harder!

Splitting tables into parts so that queries and work with only relevant rows, hopefully in parallel, is the core principle behind sharding. PostgreSQL v10 introduced the partitioning feature, which has since then seen many improvements and wide adoption. Read more about partitioning here.

Vertically scaling using paritioning involves creating partitions in different tablespaces (on different disks). Horizontal scaling involves combining partitioning and FDW.

Partitioning + FDW

Going with the example from the Postgres documentation, let’s create the partition root table measurement having one local partition table and one foreign partition table:

destdb=# CREATE TABLE measurement (

city_id int not null,

logdate date not null,

peaktemp int,

unitsales int

) PARTITION BY RANGE (logdate);

CREATE TABLE

destdb=# CREATE TABLE measurement_y2023

PARTITION OF measurement

FOR VALUES FROM ('2023-01-01') TO ('2024-01-01');

CREATE TABLE

destdb=# CREATE FOREIGN TABLE measurement_y2022

PARTITION OF measurement

FOR VALUES FROM ('2022-01-01') TO ('2023-01-01')

SERVER src;

CREATE FOREIGN TABLE

The foreign table is only a proxy, so the actual table itself must be present on the foreign server:

srcdb=# CREATE TABLE measurement_y2022 (

city_id int not null,

logdate date not null

CHECK (logdate >= '2022-01-01' and logdate <= '2023-01-01'),

peaktemp int,

unitsales int

);

CREATE TABLE

We can now insert rows into the root table and have it routed to the appropriate partition. You can see that the SELECT query performs both a local scan and a foreign scan and combines the results.

destdb=# insert into measurement (city_id, logdate, peaktemp, unitsales)

values (1, '2022-01-03', 66, 100), (1, '2023-01-03', 67, 300);

INSERT 0 2

destdb=# select * from measurement;

city_id | logdate | peaktemp | unitsales

---------+------------+----------+-----------

1 | 2022-01-03 | 66 | 100

1 | 2023-01-03 | 67 | 300

(2 rows)

destdb=# explain select * from measurement;

QUERY PLAN

-------------------------------------------------------------------------------------------------

Append (cost=100.00..219.43 rows=3898 width=16)

-> Foreign Scan on measurement_y2022 measurement_1 (cost=100.00..171.44 rows=2048 width=16)

-> Seq Scan on measurement_y2023 measurement_2 (cost=0.00..28.50 rows=1850 width=16)

(3 rows)

However, both partitioning and foreign tables still have implementation limitations in PostgreSQL, which means that this setup works satisfactorily only for simple tables and basic queries.

Citus 11

Microsoft released the fully open source version of Citus, v11, a few months ago. It is developed and available as a regular Postgres extension that can be installed into a regular Postgres deployment.

It offers many features over and above what can be achieved with partitioning and FDW, including colocating partitions of related tables and concurrent shard rebalancing. Find more here

About pgDash

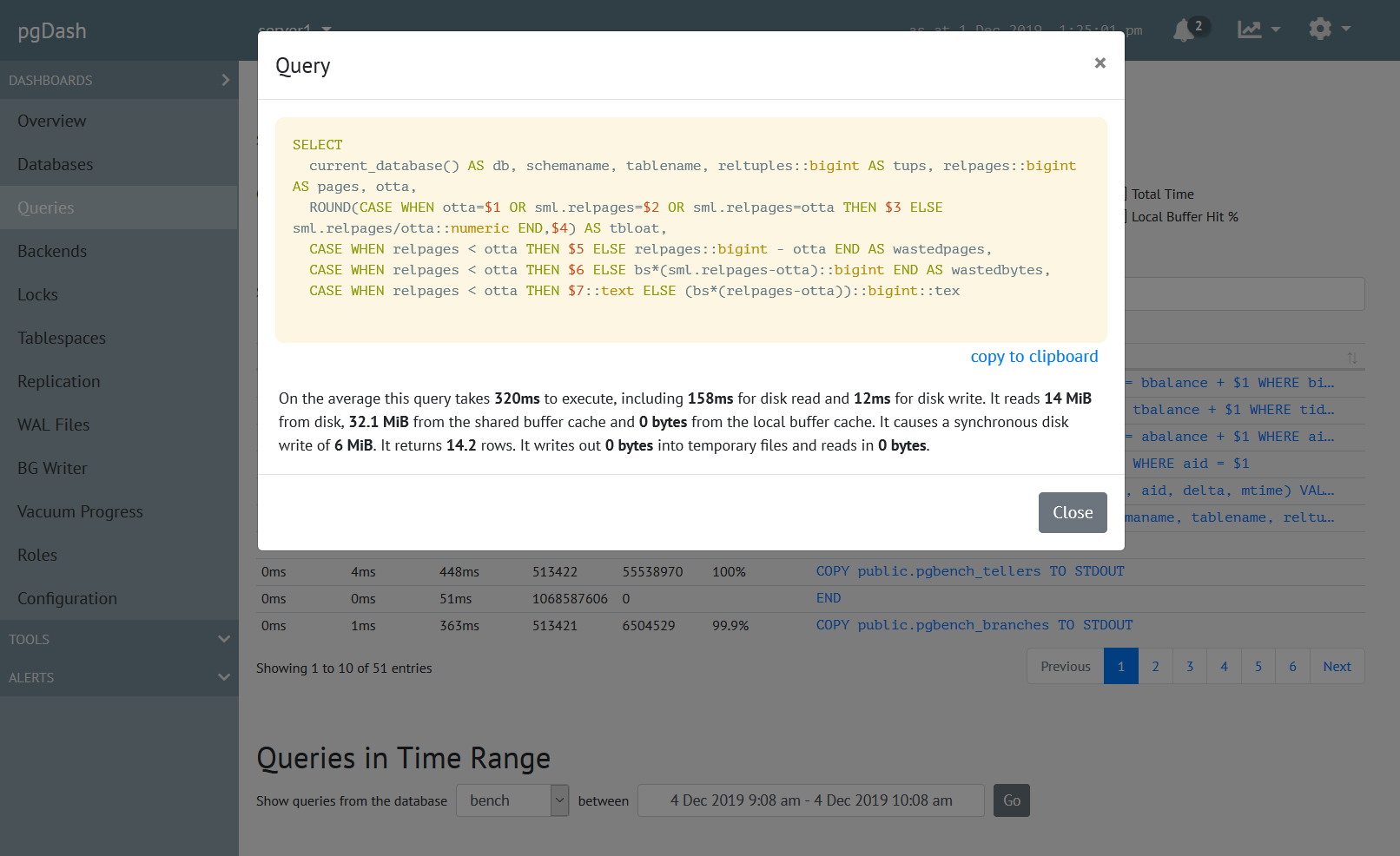

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.