The Write Ahead Logging subsystem in PostgreSQL is at the core of how it

delivers its promise of ACID transactions. A very visible effect of this

architecture is a large number of 16 MB files lying around in a directory

called pg_wal. Sometimes though, these files have a habit of accumulating and

eating up lots of disk space.

Read on to learn about why this happens, whether you should be concerned, and what you can do about it.

The Write Ahead Logging System

Nearly all modern RDBMS use write-ahead logging to ensure that the relational data it is entrusted with is consistent and safe, even in the event of hardware or software failures. Write-ahead logging involves maintaining an append-only log of all changes happening to the on-disk files that store the relational data. In Postgres, these log files are called Write Ahead Log files, or simply WAL files.

WAL files in PostgreSQL are all 16 MB in size (by default, though it is rarely

set to different value in practice) and live in a subdirectory called pg_wal

under the data directory of the PostgreSQL cluster. As transactions that modify

data happen on the server, WAL files keep getting generated. There is however,

no direct relation between the number of WAL files and the number of

transactions. The only real takeaway is that write-heavy workloads will result

in the creation of more WAL files. A sudden increase in writes will result in

a sudden increase in WAL file count.

The WAL files that are generated need to be retained for a while, for one of the 3 reasons listed later on in this article. The older ones get deleted when they are no longer required. However, a few old WAL files are retained even if they are eligible for deletion – this is for performance reasons, as writing and syncing a brand new zero-filled 16 MB file is costlier than renaming and reusing an existing file of the correct size.

The creation of WAL files and deleting of old WAL files is an automatic process in PostgreSQL and does not require any setup. There are however, some configuration settings that influence the processes that do this, and tuning these can be beneficial for your deployment.

On a typical Postgres server running with default values you can expect to see the total size of WAL files to be at least 80 MB, increasing occasionally to 1GB but dropping back to 80 MB within 5 minutes.

Why are WAL files retained?

If you have more than max_wal_size amount of WAL files, it is simply because Postgres still needs them. It takes a bit of digging to find out why. Typically, it is only because of one of three reasons:

- Checkpoints: The primary reason for WAL files even to exist is to safely store data that was changed since the last checkpoint, until Postgres is ready to do the next checkpoint. A WAL file cannot be deleted until it has been included in a checkpoint.

- Replication: If a WAL file is known to contain data required for a replication happening via a replication slot, it cannot be deleted. The setting of

wal_keep_sizecovers the case of replication happening via other means. - WAL Archiving: If archiving is enabled and a WAL file has not been successfully archived yet, it cannot be deleted.

Let’s have a deeper look at each case.

Checkpoints

The settings checkpoint_timeout (default of 5 minutes) and max_wal_size (default of 1 GB) determine when checkpoints occur, for the most part. Typically the checkpoint operation starts when the total size of WAL files have reached max_wal_size, or when checkpoint_timeout duration has elapsed, whichever happens earlier. Checkpoints can also be performed using the CHECKPOINT SQL command.

If both these values are set too high for typical workload, checkpoints can occur too infrequently. This results in a build up of WAL files.

Build up can also occur if there is a sudden burst of write-heavy traffic, and a set of fresh WAL files pile up until the next checkpoint occurs.

👉 If WAL files are building up and you’ve ruled out the other two reasons, then you can try reducing either or both of these parameters to cause checkpoints to occur more frequently. Note that checkpoints are disk I/O heavy, and more frequent checkpoints mean that more burst I/O bandwidth is required.

Replication

The basis of both logical and physical replication in Postgres is the sequential change log in the form of WAL files. If a server knows that a logical or physical replication client needs a particular WAL file, it is not deleted.

In other words, a lagging replica or a slow logical replication consumer can cause WAL file buildup.

Replication via Replication Slots

Replication slots provide a mechanism for the primary server to keep track of how far a consumer has read the WAL file sequence. Typically, a physical or logical replication client will create a replication slot, and regularly provide feedback to the primary server about how far it has consumed the change log sequence.

The pg_replication_slots system view provides the list of all replication slots on the server. Here is the information about a single slot (note that the ~ represents NULL.)

postgres=# select * from pg_replication_slots where slot_name = 'slot_standby2';

-[ RECORD 1 ]-------+--------------

slot_name | slot_standby2

plugin | ~

slot_type | physical

datoid | ~

database | ~

temporary | f

active | t

active_pid | 996746

xmin | ~

catalog_xmin | ~

restart_lsn | 1/48000060

confirmed_flush_lsn | ~

wal_status | reserved

safe_wal_size | ~

two_phase | f

conflicting | ~

The interesting fields here are active and wal_status. The output shows that there is an active WAL sender process shipping log files to a standby server. The wal_status of reserved is what you want – it says that the WAL files needed by this slot is within the limit of max(max_wal_size, wal_keep_size) . (wal_keep_size is described in the next section.)

If for some reason our standby gets disconnected or goes down, it becomes inactive and active becomes false:

postgres=# select * from pg_replication_slots where slot_name = 'slot_standby2';

-[ RECORD 1 ]-------+--------------

slot_name | slot_standby2

plugin | ~

slot_type | physical

datoid | ~

database | ~

temporary | f

active | f

active_pid | ~

xmin | ~

catalog_xmin | ~

restart_lsn | 1/48000060

confirmed_flush_lsn | ~

wal_status | reserved

safe_wal_size | ~

two_phase | f

conflicting | ~

The wal_status is still reserved, but then the WAL files start getting retained and eventually exceed the limit set by the other parameters. The wal_status then becomes extended:

postgres=# select * from pg_replication_slots where slot_name = 'slot_standby2';

-[ RECORD 1 ]-------+--------------

slot_name | slot_standby2

plugin | ~

slot_type | physical

datoid | ~

database | ~

temporary | f

active | f

active_pid | ~

xmin | ~

catalog_xmin | ~

restart_lsn | 1/48000060

confirmed_flush_lsn | ~

wal_status | extended

safe_wal_size | ~

two_phase | f

conflicting | ~

👉 So if you have a slot with wal_status as extended and the WAL files add up to more than the maximum of max_wal_size and wal_keep_size, then the lagging (or dead) standby behind this slot is the reason for the WAL file buildup.

Note that active flag does not have to be false for the wal_status to become extended, but it is being false will indicate a dead replica, and true will indicate a lagging replica.

The solution is of course to fix the standby replica, or get rid of the slot if the replica is no longer needed. The slot can be removed with pg_drop_replication_slot()

Automatically dropping lagging standbys

Prior to PostgreSQL v13, an inactive or chronically lagging standby could cause an interminable build up of WAL files on the primary server and eventually take it down by exhausting disk space. It is usually desirable to kill a bad standby rather than having it kill a primary. To this end, the setting max_slot_wal_keep_size was introduced in v13.

This sets a limit on the maximum size of WAL files (unit of bytes) that a replication slot is allowed to cause the retention of. If the slot requires the retention of WAL files higher than this limit, then the slot is set to wal_status of unreserved. From there, it can either go back to a normal state of reserved or extended, or can be lost which indicates it is not possible to continue replication on the slot.

To illustrate, assume max_wal_size is 1GB and max_slot_wal_keep_size is 5GB. As long as a slot’s WAL file requirement is within 1GB, it’s wal_status remains reserved. Once it exceeds 1GB but is less than 5GB it will be extended. In this state, Postgres will is still committed to retain the necessary WAL files.

Once it exceeds 5GB though, it’s status changes to unreserved. Postgres makes no effort to keep more than 5GB’s worth of WAL files around. It does not actively delete the older ones though, so there is still a window where the standby can catch up and get it’s status changed to extended or even reserved. If it does not, though, Postgres will eventually mercilessly delete the WAL files that it knows that the slot does require to continue replication, and set the status to lost to commemorate this event. There is no coming back for lost slots.

Physical Replication without Slots

In Postgres, physical replication can be setup between a primary and one or more standbys without using replication slots. There are two ways to do this:

- setup streaming replication without using replication slots

- log shipping

Using replication without slots is not so common anymore, but is valid and occasionally useful.

In such cases, the primary server has no idea how far the standbys have consumed the WAL file sequence. It is possible to instruct the primary to not delete a WAL file as long as checkpoint is complete, but keep it around for a while to allow the standbys to catch up. Rather than increase max_wal_size (which will affect checkpointer performance), you can use the setting wal_keep_size. Postgres will simply retain up to wal_keep_size amount of WAL files if this is set to a non-zero value.

👉 If you have a non-zero setting for wal_keep_size and WAL files keep building up to this value, this can be the reason. If the wal_keep_size setting can be reduced based on current deployment status, consider doing so.

WAL Archiving

In PostgreSQL, WAL files can be archived and restored for incremental backup and point-in-time recovery. This is called WAL archiving in Postgres jargon.

With WAL archiving, the Postgres server will invoke a user-supplied script or dynamic library whenever a WAL file is created. The script or library function is then supposed to copy this file to a safe, off-server location, like an NFS volume or S3 bucket. These files can then be restored into a recovery-mode server later on as required.

The catch here is that if the archival script/library fails, the Postgres server will continually retry archiving the same WAL file forever. There simply is no mechanism to tell Postgres to do anything else in case of failures.

👉 When WAL archiving is enabled, WAL files cannot be deleted until successfully archived. A broken or continually failing WAL archiver script can be the culprit if the WAL files pile up.

To check the current number of WAL files that are ready for archiving, you can check the count of *.ready files in the pg_wal/archive_status subdirectory:

$ ls -n pg_wal/archive_status/

total 0

-rw------- 1 1000 1000 0 Mar 28 11:40 000000010000000100000081.done

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000082.done

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000083.done

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000084.done

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000085.done

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000086.done

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000087.done

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000088.ready

-rw------- 1 1000 1000 0 Mar 28 11:43 000000010000000100000089.ready

-rw------- 1 1000 1000 0 Mar 28 11:43 00000001000000010000008A.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 00000001000000010000008B.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 00000001000000010000008C.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 00000001000000010000008D.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 00000001000000010000008E.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 00000001000000010000008F.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 000000010000000100000090.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 000000010000000100000091.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 000000010000000100000092.ready

-rw------- 1 1000 1000 0 Mar 28 11:44 000000010000000100000093.ready

-rw------- 1 1000 1000 0 Mar 28 11:45 000000010000000100000094.ready

-rw------- 1 1000 1000 0 Mar 28 11:45 000000010000000100000095.ready

-rw------- 1 1000 1000 0 Mar 28 11:45 000000010000000100000096.ready

-rw------- 1 1000 1000 0 Mar 28 11:46 000000010000000100000097.ready

Here there are 16 files that are ready to be archived. It is likely that the archival script/library is in the process of archiving the WAL file 000000010000000100000088.

Tools like pgmetrics can fetch this count even without physical file system access and report it as text or json:

..[snip]..

WAL Files:

WAL Archiving? yes

WAL Files: 25

Ready Files: 16

Archive Rate: 0.17 per min

Last Archived: 28 Mar 2024 11:46:05 AM (8 seconds ago)

Last Failure:

Totals: 264 succeeded, 0 failed

Totals Since: 27 Mar 2024 9:18:28 AM (1 day ago)

..[snip]..

Cheatsheet - Detecting and Preventing WAL File Buildup

Key things to monitor for detecting WAL file build up:

- the disk space usage of the disk where the WAL files live

- replication slots whose

activebecomesfalse - replication slots whose

wal_statusbecomesextendedorunreservedorlost - replication lags of each standby

- failures of WAL archiving script/library

- the number of files that ready to archive

Configuration entries related to WAL file buildup:

checkpoint_timeout(units of time) - start a checkpoint after this much time has elapsed since the last onemax_wal_size(units of bytes) - start checkpointing when there is this much WAL file datawal_keep_size(units of bytes) - keep around this much for non-replication-slot physical replication clients to catch upmax_slot_wal_keep_size(units of bytes) - kill standbys that demand more than this much WAL file retention- background writer and shared buffer cache settings - can affect checkpoint frequency and performance

Structural fixes that may help mitigate WAL file buildup:

- store WAL files on another disk on the same server - data files and WAL files have vastly different access patterns and disk usage, it makes sense to keep them on different disks (with different specs) for performance and management reasons

- use cascaded replication - use one or two physically-replicated fast standbys, to which further non-essential or supplemental consumers can be connected. This keeps the WAL file retention requirements on the primary predictable and manageable.

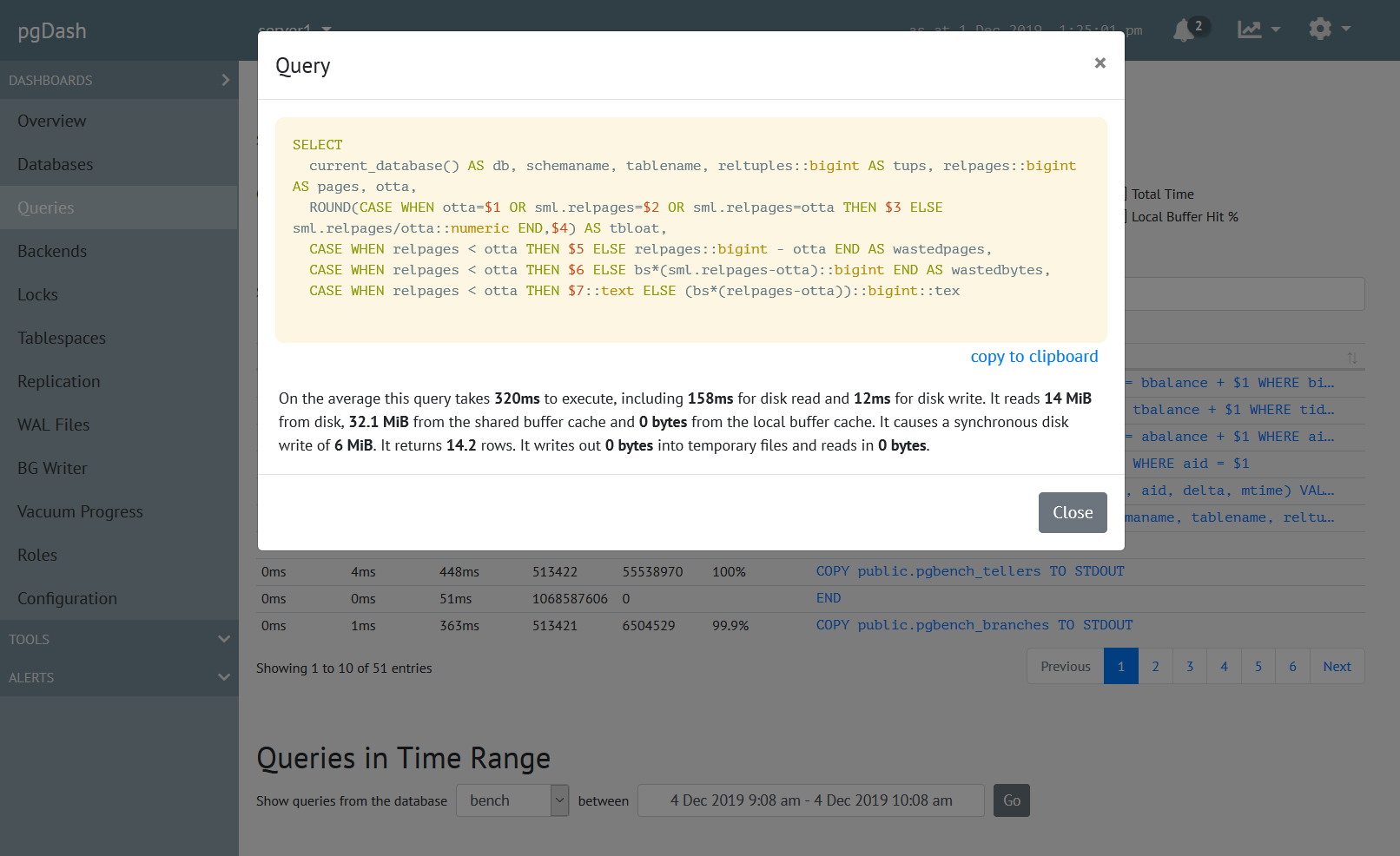

About pgDash

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics.

pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, diagnostics, alerting, teams and more. Checkout the features here or signup today for a free trial.